Improving the way you organize your products or content is one of the easiest and most effective ways to expand the number of valuable pages on your site. All things being equal, the more pages you have, the more search terms you can rank for, up to the limit set by your site’s overall authority.

E-commerce, classifieds and listings websites can make use of facets relating to things like product type, price and location. Dynamic pages are then generated around sets of products, which makes it easy for customers to find what they need on-site and creates pages that could bring in long-tail search traffic. These are often the most important pages on the site, driving the majority of organic traffic. Examples of these facets include:

- Product types: size, style, price, colour, material, brand, manufacturer

- Classifieds/listings types: location, price, salary

Most websites that publish regular content like blog posts or news articles can make use of the following page groups. If they are carefully managed, these pages offer an opportunity for extra search traffic in the long term, since the tag and category pages can rank in their own right:

- Categories / sub-categories / channels: very broad topic pages. Should be linked from your main navigation.

- Tags: more specific than categories, broader than individual pages. Can be used to provide traffic avenues into sets of blog posts that can get lost in broad channel pages. Should not be used on their own without a category also being applied to the same content (to avoid new content being buried too deep into the site). Should be linked from each tagged piece of content and in some cases can be used to dynamically link related posts on the same topic.

- Authors: only really useful if you want reporters, bloggers or content editors to ‘own’ their content. Should be linked from each ‘owned’ piece of content.

These facets can also be combined to allow for even more specific searches (e.g. women’s red shirts size 10; admin jobs in north west London).
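To get a feel for how quickly combined facets multiply, here is a minimal Python sketch. The facet names and values are hypothetical and only for illustration; a real site would read them from its product data.

```python
from itertools import product

# Hypothetical facet values for a clothing site (illustrative only).
facets = {
    "gender": ["womens", "mens"],
    "colour": ["red", "blue", "black"],
    "size": ["8", "10", "12"],
}


def facet_combinations(facets):
    """Yield one dict per combination, taking one value from every facet."""
    names = list(facets)
    for values in product(*(facets[name] for name in names)):
        yield dict(zip(names, values))


combos = list(facet_combinations(facets))
print(len(combos))  # 2 * 3 * 3 = 18 candidate pages
print(combos[0])    # {'gender': 'womens', 'colour': 'red', 'size': '8'}
```

Even three small facets produce 18 candidate pages, which is why it is worth deciding up front which combinations deserve an indexable page.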

Beware of ‘too much choice’

A small set of high-quality, well-used and easily maintained tags/facets/categories is much more valuable than a large set of lower-quality ones that will essentially become duplicates of each other, since the same content will be added to each one.

Good: facet titles that encompass one product/page group each and form clear links to specific sections of your site:

- Red evening dresses

- Blogging advice and best practice

Bad: lots of facets that essentially mean the same thing, based around specific keywords:

- Red evening dresses, red occasion dresses, red dresses for evening, red dresses for occasions

- Blog, blogger, blogging, blogging tips, blogging advice, blogging best practice

While duplicate content might not be such a disastrous problem for search performance (as Google should be able to decide on the best one to index), a large number of similar pages reduces your crawl efficiency, causes confusion for users about the best way to navigate your site, and splits ranking/social/engagement signals across several pages.

This problem also makes life more difficult for your editors, who have to remember to add a lot of very similar classifications whenever they upload a product or post. If they miss any, each classification will be missing some of your good content, making all of those pages weaker and thinner as a result. It’s at this point you risk a Panda penalty; every tag or facet you create should be valuable, unique and interesting to your users.
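One rough way to spot keyword-variant tags like the ‘bad’ examples above is to group tags that use the same words once word order, filler words and simple plurals are ignored. The sketch below is an illustration under those assumptions, not a full solution: the stopword list and the plural rule are deliberately crude, and it will not catch true synonyms (such as ‘evening’ and ‘occasion’), which still need an editor’s judgement.

```python
from collections import defaultdict

# Small, hypothetical stopword list; extend it for your own tags.
STOPWORDS = {"for", "and", "the", "of"}


def normalise(tag):
    """Reduce a tag to an order-independent set of crudely singularised words."""
    words = set()
    for word in tag.lower().split():
        if word in STOPWORDS:
            continue
        if word.endswith("sses"):        # dresses -> dress
            word = word[:-2]
        elif word.endswith("s") and not word.endswith("ss"):
            word = word[:-1]             # occasions -> occasion
        words.add(word)
    return frozenset(words)


def find_duplicate_groups(tags):
    """Return lists of tags that normalise to the same set of words."""
    groups = defaultdict(list)
    for tag in tags:
        groups[normalise(tag)].append(tag)
    return [group for group in groups.values() if len(group) > 1]


tags = [
    "Red evening dresses",
    "Red occasion dresses",
    "Red dresses for evening",
    "Red dresses for occasions",
]
for group in find_duplicate_groups(tags):
    print(group)
```

Each printed group is a candidate for merging into a single tag.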

A quick word on pagination

The more content or products you add to a tag/facet, the more paginated pages you’ll create. The total number of pages is usually the number of data items plus the number of listing pages that display links to those data items.
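As a worked example of that arithmetic, here is a small Python sketch. It assumes a fixed number of items per listing page, which is an assumption for illustration and will vary by CMS.

```python
import math


def total_pages(item_count, items_per_page):
    """Item pages plus the paginated listing pages needed to link to them."""
    if items_per_page <= 0:
        raise ValueError("items_per_page must be positive")
    listing_pages = math.ceil(item_count / items_per_page)
    return item_count + listing_pages


# 95 items at 20 per page: 95 item pages + 5 listing pages.
print(total_pages(95, 20))  # 100
```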

Remember to make sure your paginated pages are marked up correctly with valid rel next/prev tags so that Google knows to index page one.
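For illustration, the markup for a given page in a series could be generated along these lines. This is a sketch that assumes a hypothetical `?page=N` URL pattern, with page one living at the bare URL; adapt it to however your site actually builds paginated URLs.

```python
def pagination_links(base_url, page, last_page):
    """Return the rel prev/next <link> tags for one page of a paginated series.

    Assumes page 1 is served at base_url and later pages at base_url?page=N
    (a hypothetical URL pattern).
    """
    links = []
    if page > 1:
        prev_url = base_url if page == 2 else f"{base_url}?page={page - 1}"
        links.append(f'<link rel="prev" href="{prev_url}">')
    if page < last_page:
        links.append(f'<link rel="next" href="{base_url}?page={page + 1}">')
    return links


for tag in pagination_links("https://example.com/tags/blogging", 2, 3):
    print(tag)
# <link rel="prev" href="https://example.com/tags/blogging">
# <link rel="next" href="https://example.com/tags/blogging?page=3">
```

The first page has only a next link and the last page has only a prev link.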

How to clean, build and future-proof your taxonomies with help from DeepCrawl

Step 1: Clean-up existing pages

Assess performance of existing tags/categories/facets

Create a list of the taxonomy pages that currently bring in traffic using your DeepCrawl report (link your project with Google Analytics, run a Universal Crawl and then view Universal > Analytics > Organic Landing Pages) and Google Search Console Indexed Pages (in Search Console > Search Traffic > Search Analytics).

You can also check for low-quality pages that do not bring in any organic traffic in DeepCrawl’s Missing in Organic Landing Pages report. Consider whether to de-index, canonicalise or delete any tag pages that do not bring in any traffic (your decision about what to do with them should be based on their on-site value).

You may also want to use the data on your most successful pages to inform your decisions about new classifications to create.

Check for existing pagination issues

Check your set-up of rel next/prev before adding any new sets of pages. You can check how paginated pages are linked via your DeepCrawl report in Indexation > Indexable Pages > Paginated Pages and in Content > Pagination > First Pages > Unlinked Paginated Pages.

See our Pagination guide for more information on the correct implementation of pagination.

Optimise crawling efficiency

You should remove duplication now to allow your new expanded architecture to be seen when it goes live. The appropriate use of disallow, canonical and noindex is important here: for more information see our post on the Seven Duplicate Content Issues you Need to Fix Now.

Merge any aliases and delete very under-used (fewer than five posts attached) or out-of-date classifications that cannot be merged into new ones.
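If you can export your tags and the posts attached to them, the merge-then-prune step might look something like the sketch below. The tag names, post IDs and alias mapping are all hypothetical; the five-post threshold is the one suggested above.

```python
from collections import defaultdict

MIN_POSTS = 5  # threshold suggested in the text


def merge_aliases(tag_posts, aliases):
    """Fold each alias tag's posts into its preferred tag."""
    merged = defaultdict(set)
    for tag, posts in tag_posts.items():
        merged[aliases.get(tag, tag)].update(posts)
    return dict(merged)


def under_used(tag_posts, min_posts=MIN_POSTS):
    """Return tags with fewer than min_posts posts attached."""
    return sorted(t for t, posts in tag_posts.items() if len(posts) < min_posts)


# Hypothetical export: tag -> set of post IDs.
tag_posts = {
    "blogging": {1, 2, 3, 4},
    "blogger": {4, 5},
    "blog": {6},
    "typography": {7, 8},
}
# Hypothetical alias decisions: alias -> preferred tag.
aliases = {"blogger": "blogging", "blog": "blogging"}

merged = merge_aliases(tag_posts, aliases)
print(len(merged["blogging"]))  # 6 posts after merging
print(under_used(merged))       # ['typography']
```

Merging first matters: on their own, all four tags fall below the threshold, but after merging only one remains a candidate for deletion.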

If you’re working on an e-commerce or classifieds/listings site, review your existing facets, including combinations. When looking for pages to de-index, don’t forget sort orders and other filters, which can create new pages by themselves. These pages might be valuable on some sites, but in most instances they should be de-indexed, with a preferred default option being the only one that is indexable.
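As a rough illustration, the preferred default URL for a sorted or re-ordered listing can be derived by dropping the parameters that only change presentation. The parameter names below (`sort`, `order`, `view`) are hypothetical; use whichever ones your own platform generates.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that change presentation but not the set of products.
NON_CANONICAL_PARAMS = {"sort", "order", "view"}


def canonical_url(url):
    """Drop presentation-only parameters and put the rest in a stable order."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in NON_CANONICAL_PARAMS
    ]
    kept.sort()
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(canonical_url("https://example.com/dresses?size=10&sort=price_asc&colour=red"))
# https://example.com/dresses?colour=red&size=10
```

The result could feed a canonical tag, or be compared with the requested URL to decide whether a page should carry noindex.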

If you have different currency or country variations, don’t forget to make use of hreflang tags to reduce international duplication.
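A minimal sketch of generating those tags is shown below. The language-region codes and URLs are made up for the example; each variant of a page would normally carry the same full set of tags, including one pointing at itself.

```python
def hreflang_links(variants, default=None):
    """Build hreflang <link> tags from a {language-region code: URL} mapping."""
    links = [
        f'<link rel="alternate" hreflang="{code}" href="{url}">'
        for code, url in variants.items()
    ]
    if default:
        # x-default marks the fallback page for users matching no listed variant.
        links.append(f'<link rel="alternate" hreflang="x-default" href="{default}">')
    return links


# Hypothetical country variations of one facet page.
variants = {
    "en-gb": "https://example.com/uk/dresses",
    "en-us": "https://example.com/us/dresses",
}
for tag in hreflang_links(variants, default="https://example.com/dresses"):
    print(tag)
```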

You can check for duplicate content in your DeepCrawl report: see Content > Body Content > Duplicate Body Content and Content > Titles & Descriptions > Duplicate Titles/Descriptions.

Step 2: Research

Topic research

Now it’s time to gather research on the new classifications you’re missing. Use the traditional keyword research channels and/or internal search data here.

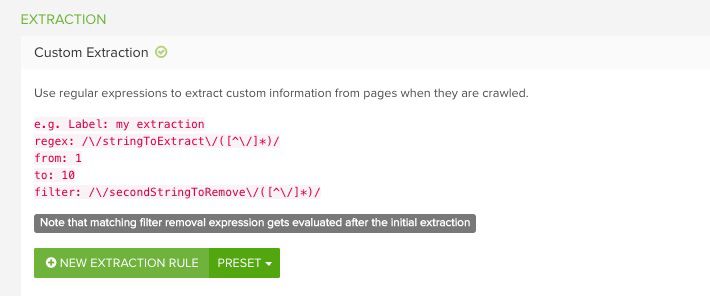

You can also extract topics from your own site and your competitors’ sites using carefully restricted DeepCrawl crawls. Where the meta keywords tag is used, custom extraction can pull out that keyword data too.

Take a look at our guide to using custom extraction for more details on how to extract the topics you want.

Remember, focus on topics, not keywords, since the end goal is to create a smaller set of useful pages.

Create your hierarchy

Compare your list of existing pages to your research for differences. The idea here is to identify a hierarchy of topics, from broad to specific, that will complement your existing arsenal of content, products and listings.

This can then be split into categories, subcategories, tags and facets to form your new taxonomy.

Step 3: Create your new pages

How this works will depend on the type of website you’re working on and your CMS. For example, facets and categories on faceted search websites will usually need to be added by the development team, as they have to be processed and connected to the data before they can work.

But whatever type of website you have, add content to your new tags and facets straight away; don’t leave new taxonomies sitting with few or no pages attached to them. It might be easier and more manageable to do the work in batches if you are adding lots of new pages.

If you are concerned about how your new taxonomy will affect your live site, you can use DeepCrawl’s Test vs Live feature to apply them to a staging site first and check for issues with things like pagination, duplicate content, missing titles/descriptions and hreflang tags.

Step 4: Final checks and future-proofing

Check your set-up

Check that all your new pages are linked within the site using DeepCrawl’s Only in Sitemaps report (under Universal > Sitemaps). This will show you all of the pages that are included in a Sitemap, but that are not linked anywhere in the site.
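The underlying check is a set difference: URLs listed in the Sitemap minus URLs found through internal links. The sketch below illustrates the idea in Python on a hand-written Sitemap and a hypothetical list of linked URLs; it is not how the DeepCrawl report is implemented.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemaps protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Extract the set of <loc> URLs from a Sitemap XML string."""
    root = ET.fromstring(xml_text)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def only_in_sitemap(xml_text, linked_urls):
    """Return Sitemap URLs that were never found via an internal link."""
    return sorted(sitemap_urls(xml_text) - set(linked_urls))


sitemap = """
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/tags/blogging</loc></url>
  <url><loc>https://example.com/tags/typography</loc></url>
</urlset>
""".strip()

# Hypothetical list of URLs discovered by following internal links.
linked = ["https://example.com/", "https://example.com/tags/blogging"]

print(only_in_sitemap(sitemap, linked))
# ['https://example.com/tags/typography']
```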

It’s also a good idea to double-check that no pagination issues have crept in during the creation process: use Indexation > Indexable Pages > Paginated Pages and Content > Pagination > First Pages > Unlinked Paginated Pages.

Future-proof your strategy

To avoid any under-used pages in the future, it’s important to set a minimum threshold for extra classifications to be created. For example, suggest that no new tags are created before there are at least five posts already live about that topic. Reducing this threshold will increase the number of indexable pages, but reduce the average quality of engagement.

Also consider how broad a match between content/product/listing and a tag/facet should be before these elements are linked.

For example, if a dress has blue sleeves, should it be added under a ‘blue dresses’ facet? If a politician is mentioned in an article, should their tag be added to that article?

Relaxing the rules on relevancy may increase the number of indexable pages on the site without reducing user engagement (as long as the content is still useful for people interested in that topic). As ever in online marketing, understanding your audience is crucial to avoid spamming people with irrelevant content on their way through the site.

Learn more about site architecture with our webinar and white paper

If you’re interested in exploring the topics of taxonomy and site architecture further, make sure you read our recap of the webinar we held with Jamie Alberico about how to structure your website for success. The recap post includes all the key takeaways, the slides that Jamie presented and a full recording of the session.

Our site architecture white paper also goes into much more detail on creating more user-friendly taxonomies, optimising internal linking and much more, so make sure you give it a read.