Optimizing your site’s crawlability and indexability is fundamental for any technical SEO plan. If search engines cannot easily crawl and index your sites, it will be harder for your content to rank well in the SERPs (and harder for potential customers to find your businesses). As technical SEOs and digital marketers, we need to choose the correct signaling methods to control both crawling and indexing in order to build a strong foundation for our websites.

But these signals are not always straightforward. For example: although blocking a URL from being crawled makes it less likely to be indexed, it doesn’t actually control indexing. Likewise, restricting a page from being indexed doesn’t necessarily prevent it from being crawled in the first place.

Having a clear understanding of which signals control crawling, which control indexing, and how to utilize them in different situations will help ensure a strong technical foundation from which to grow your site. In this article, we will show you how.

This post is part of Lumar (formerly Deepcrawl)’s series on Website Health. In this series, we deep-dive into the core elements of website health that an SEO strategy needs to address in order to give your website content its best chance of ranking well in the search engine results pages (SERPs).

This time, we’re discussing how to implement crawlability and indexability controls throughout your website.

Crawling controls:

Robots.txt

Disallowing a URL or a set of URLs in robots.txt is one of the most effective ways to prevent the crawling of those URLs, due to the fact that Google considers it a directive and not just a hint. Although this isn’t the case for all search engines; for crawlers from search engines other than Google, it’s worth keeping in mind that robots.txt rules can be ignored.

Also worth keeping in mind: more specific rules (both for user agent and folder path) will override the more general rules you set here, so if you disallow all user agents from crawling a set of URLs, but then also specifically allow Googlebot, or Bingbot (or any specific user agent) to crawl the same set of URLs, the more specific rule will be followed by each specified search engine and those particular user agents will crawl the URLs.

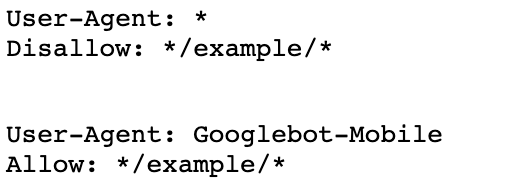

For example, the following robots.txt rules would block all user agents, with the exception of Googlebot-Mobile from crawling */example/*:

You can research more about how Google handles robots.txt rules in their robots.txt specification document – and don’t forget to test before rolling out any robots.txt changes!

Nofollow

Using internal nofollows is another method of having some control over which pages search engines should and should not crawl.

Nofollows, however, are not a “directive”, so search engines will use them instead as a “hint”. Because of this, it may be useful to apply robots.txt blocks to pages that you definitely do not want to be crawled—although internal nofollows are certainly better than nothing when it comes to controlling which URLs are crawled.

It’s also important to make the distinction between page-level nofollows and link-level no follows.

- Page level nofollows are included in the meta robots and are a hint to search engines that no links on that page should be crawled (but this does not stop the page itself from being crawled).

- Link-level nofollows are applied on an individual link basis and are a hint for search engines not to crawl the page that is linked to (via that particular link).

JavaScript

Depending on the method of implementation, some JavaScript cannot be crawled, even when rendered, if it does not utilize the <a> element with an href attribute method of linking in either the HTML or the rendered Domain Object Model.

Although the inability of some JavaScript to be crawled is often discussed as a negative in terms of SEO (as the pages being referenced cannot be crawled or discovered), if it is linking to pages that you do not want to be crawled (for example, non-optimized faceted navigation URLs), this is an option to use JavaScript inaccessibility to an advantage.

Indexing controls:

Noindex

Applying a noindex via a robots meta tag or HTTP response is one of the clearest signals to search engines to either not include a page in their index, or to remove it from the index.

Both of these methods still require a page to be crawled in order for the noindex to be recognized, so you’ll want to make sure that the page is crawlable if you want to make absolutely sure a page stays out of the index (somewhat counterintuitively!).

Search engines will still crawl pages that have a noindex, however. It’s been stated that eventually, Google will start to recognize a long-term noindexed URL as a nofollow. Therefore, although a noindex is not a crawling control, it may eventually end up having that effect. (This is particularly important to note in circumstances such as pagination, where you may not want a paginated series indexed, but will want them crawled so that all your products are available for search engines.)

Canonicals

Canonicals are used to identify the primary version of a page when a set of similar pages exist. Some search engines, such as Google, will assign a canonical version of a page if it’s not specifically set—and can even override a canonical when it is set.

The canonical version of a page is the version that will be indexed, with the non-canonical versions not being indexed (due to not being considered the primary version of that page).

Although canonicals can be used to help control indexing, they have no impact on crawling, so search engines will still crawl URLs that are canonicalized.

A note on robots.txt for index control

Formerly, it was possible to assign noindex to pages via robots.txt. However, this support has since been deprecated. Noindex should not be applied via robots.txt (N.B. this is different from implementing noindex via meta robots, which is still supported).

Password-protected URLs

Utilizing password protection can prevent any pages that are hidden behind the login from being indexed. This can be particularly useful in situations such as migrations, where a staging site is still being worked on and tested, but not yet launched.

Although search engines can, in theory, crawl the URL, it will not be able to crawl any of the content that is hidden behind the login, therefore password-protecting URLs can also prevent crawling.

The caveat to this is if a page was previously not password-protected, and then has a password applied later on, it may still appear in the index as search engines will already have crawled and indexed the page previously.

Combining controls:

It’s important to have a clear understanding of what you aim to achieve when combining controls, as sometimes combining different signals to control crawling and indexing can result in unwanted results.

Combining canonicals & noindex

Including pages with both a canonical and a noindex could be sending contradicting messages to search engines.

This is due to the canonical signal essentially combining the signals of the pages that are canonicalized — therefore, by including a noindex on a canonicalized page, it theoretically can pass on the noindex to the primary page that we actually do want to be ranking and indexed. This was touched upon by John Mueller.

Although there’s no guarantee that a noindex will be applied to the canonical page, it’s worth keeping an eye on pages in this situation to ensure you’re not sending confusing or contradictory signals to search engines.

Combining noindex & robots.txt disallows

Similarly, including both a noindex and a robots.txt disallow on a URL can sometimes cause issues, due to the fact that, if a robots.txt disallow is in place search, engines cannot crawl the URL to see the noindex.

Therefore, if a page has previously been indexed, it may remain in the index despite having a noindex applied. Even if the URL has not been indexed, if an external site is linking to it, it may be indexed despite the noindex because search engines will not crawl the URL to see the noindex (in meta robots or HTTP header).

In these situations, search results will often look like the following:

More information on this scenario can be found in this Google guidelines article.