Back in May, Bartosz Goralewicz spoke on our monthly DeepCrawl (now Lumar) webinar series about all things JavaScript.

Bartosz’s presentation provided so many expert insights that we didn’t have time to get to the questions submitted by our eager-to-learn viewers. To make sure everyone gets their full fix of JavaScript, Bartosz has kindly answered all of your questions here in full.

If you weren’t able to join us for the webinar, you can find the recording and key takeaways in our recap post here.

If you want to find out about how you can optimise sites that use JavaScript, then look no further. We’ve launched our JavaScript Rendered Crawling feature that will give you the full picture when crawling sites that rely on JavaScript.

Does the 5 second rule still matter for Googlebot?

Although there is no exact timeout for Googlebot, many experiments (including ours at Elephate) show that Google GENERALLY doesn’t wait more than 5 seconds for a script. I recommend making your scripts as fast as possible.

John Mueller agrees: “5 seconds is a good thing to aim for, I suspect many sites will find it challenging to get there though”

Technical aspects aside, I don’t think this is a discussion worth focusing on. If your website has a script that takes 5 seconds to load, you are not going to rank anyway.

WordPress themes have a lot of client-side JS, how should you deal with this?

I would approach it the same way as other JS-based websites.

Make sure that Google can see the menu items/content hidden under the tabs. Can Google see the entire content copy or just an excerpt?

It may happen that one of the WordPress plugins causes JavaScript errors in older browsers (including Chrome 41, which Google uses for website rendering).

Also, if you keep enabling more and more plugins, the process of JS execution may take a lot of time, which will have a negative effect on both Google and users. Apart from JS rendering, it’s generally good practice to use only necessary plugins, in order to keep WordPress lean and mitigate the risk of hacking.

One last thing to remember with plugins and client-side JavaScript in general: it affects low-end mobile devices and older CPUs to such an extent that performance may be unacceptable for a lot of your users.

What are some good future steps for an SEO in terms of JS?

It’s clear that JavaScript SEO is getting more and more popular. SEOs need to stay ahead of the curve when it comes to JavaScript. This means a lot of research, experimentation, crunching data, etc. It means knowing how to check if JS-based websites are being rendered properly, whether that is something as simple as using the “site:” command in Google, downloading Chrome 41 (the 3-year-old browser Google continues to use for website rendering), or using Chrome DevTools, Google’s Mobile-Friendly Test and so on.

It means being able to foresee potential obstacles like timeouts or content that requires user interaction, as I’ll talk about later.

And it means being ready for the next big thing that probably dropped yesterday and you now need to adapt to, whether it’s Google finally updating its rendering browser or one of the countless JavaScript frameworks suddenly becoming popular among developers.

Also, as I mentioned in the webinar, there is the trend Netflix started of reducing client-side JavaScript. Unfortunately, I wouldn’t assume it is going to be widely popular over the next few years. If you feel like diving into a more technical article on this topic, I highly recommend this one by Daniel Borowski.

Would you want to update your JS crawl experiments for Chrome 66?

From the very beginning, my JavaScript SEO experiments worked well on most modern browsers. Recently, we noticed that one of the experiments doesn’t seem to be working properly, and we’re going to fix it ASAP.

Google has made claims in the past about being able to render JS quite well. So hamburger icons and “read more” buttons shouldn’t be a problem, right?

It’s true that Google is getting better and better at rendering JavaScript. However, it’s still not perfect.

The first rule you should follow is to avoid event-based scenarios. If content or links are injected into the DOM only after a button click, Google will not pick them up.

Tomek Rudzki covered this topic in his Ultimate Guide to JavaScript SEO:

“If you have an online store and content hidden under a “show more” button is not apparent in the DOM before clicking, it will not be picked up by Google. Important note: It also refers to menu links.”

So, right-click the page and choose Inspect (or press CTRL + Shift + I), then check in the Elements panel whether the menu items are present before you click anything. If not, you can be almost sure Google will not pick up your hamburger menus.
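The same check can be scripted against a saved copy of the DOM. What follows is only a minimal sketch, assuming you have copied the page’s HTML into a string before clicking anything; the `LinkCollector` class, the `missing_links` helper, the markup and the example paths are all made up for illustration.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag found in an HTML string."""

    def __init__(self):
        super().__init__()
        self.hrefs = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.add(value)


def missing_links(dom_html, expected_paths):
    """Return the expected menu paths that are absent from the DOM snapshot."""
    collector = LinkCollector()
    collector.feed(dom_html)
    collector.close()
    return sorted(set(expected_paths) - collector.hrefs)


# Hypothetical snapshot taken before clicking the hamburger icon.
snapshot = """
<nav>
  <button id="hamburger">Menu</button>
  <a href="/sale">Sale</a>
</nav>
"""

# Paths we expect the menu to link to (illustrative values).
print(missing_links(snapshot, ["/sale", "/sofas", "/beds"]))
```

Any path this reports as missing only appears after user interaction, so, per the rule above, Google is unlikely to discover it.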

How does the second phase of rendering affect the implementation of structured data using Google Tag Manager?

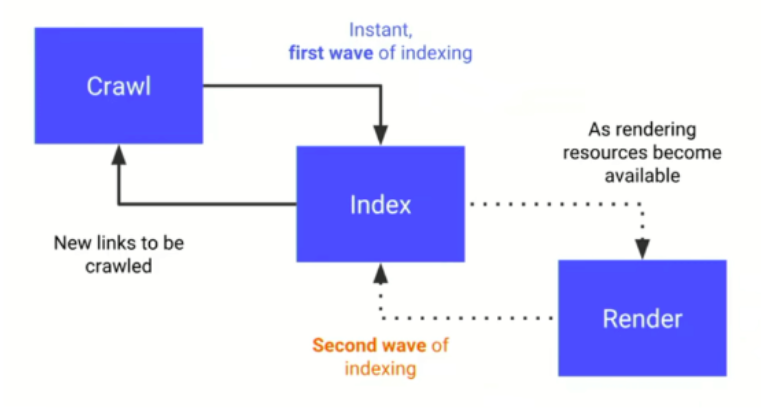

Chances are high that Google will not immediately pick up your dynamically injected markup; this is related to two-phase indexing.

In your case, Google will be able to see your structured data when rendering resources become available.

Diagnosing structured data injected by Google Tag Manager is difficult since Google’s tools are not yet ready for auditing it. The Google Structured Data testing tool struggles with JS, whereas the Rich Results testing tool can deal with it but supports only a few schema types.
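One rough way to see whether your markup depends on the second phase is to compare the raw server response with the rendered DOM. The sketch below assumes the markup is JSON-LD and that you already have both HTML versions as strings; `JsonLdExtractor`, `json_ld_types` and the sample pages are hypothetical, not part of any Google tool.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the parsed contents of <script type="application/ld+json"> tags."""

    def __init__(self):
        super().__init__()
        self._in_json_ld = False
        self._buffer = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            self._in_json_ld = False
            try:
                self.items.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # malformed blocks are simply skipped in this sketch


def json_ld_types(html):
    """Return the @type of every top-level JSON-LD object in the HTML."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    return [item.get("@type") for item in parser.items if isinstance(item, dict)]


# Hypothetical pages: the server response versus the DOM after JS has run.
raw_html = "<html><head><title>Chair</title></head><body><h1>Chair</h1></body></html>"
rendered_html = (
    "<html><head><title>Chair</title>"
    '<script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Product", "name": "Chair"}'
    "</script></head><body><h1>Chair</h1></body></html>"
)

print(json_ld_types(raw_html))       # types visible without rendering
print(json_ld_types(rendered_html))  # types visible only after rendering
```

If a type shows up only in the rendered version, it is injected by JavaScript and Google has to render the page before it can see it.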

How resource-intensive from a development point of view is it to change from client-side JavaScript to server-side JavaScript for a site that has already been launched?

You should ask your developers!

Based on my experience, developers usually don’t struggle with implementing pre-rendering; however, isomorphic JavaScript (client-side + server-side JS), despite its advantages, may be difficult to implement and is considered more time-consuming.

If a site uses 5 unique templates using JS, is it necessary to check issues concerning crawling and rendering for the entire site? Can one get fairly good sense of issues by sample crawling and rendering of these five templates?

Generally, you should be fine with crawling a sample of URLs. Just make sure that the sample is big enough to reflect the whole website.

If your website is relatively small, e.g. 50 pages, then take your time to analyze the whole website. If there are millions of URLs, sampling is OK: for each unique template, pick X URLs and analyze them.
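Picking X URLs per template is easy to script once you have a URL list. This is only a sketch under stated assumptions: the `TEMPLATE_PATTERNS` mapping, the path prefixes and the `sample_per_template` helper are hypothetical, and your own templates will need their own patterns.

```python
import random
import re
from collections import defaultdict

# Hypothetical URL patterns, one per template; adjust to your own site.
TEMPLATE_PATTERNS = {
    "product": re.compile(r"^/product/"),
    "category": re.compile(r"^/category/"),
    "blog": re.compile(r"^/blog/"),
}


def sample_per_template(paths, per_template, seed=0):
    """Group paths by the first matching template and sample each group."""
    groups = defaultdict(list)
    for path in paths:
        for name, pattern in TEMPLATE_PATTERNS.items():
            if pattern.search(path):
                groups[name].append(path)
                break  # paths that match no pattern are left out
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    return {
        name: sorted(rng.sample(urls, min(per_template, len(urls))))
        for name, urls in groups.items()
    }


# Made-up URL list standing in for a sitemap or crawl export.
all_paths = (
    [f"/product/{i}" for i in range(1000)]
    + [f"/category/{i}" for i in range(40)]
    + ["/blog/javascript-seo", "/about"]
)

sample = sample_per_template(all_paths, per_template=5)
for template, urls in sample.items():
    print(template, urls)
```

Templates with fewer than X URLs are returned in full, and the sampled paths can then be fed to a JavaScript-rendering crawl.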

The problem you will most likely encounter is that, with client-side JavaScript, your website is unlikely to rank well and Google will crawl it much more slowly than HTML websites. If you want to read more about this topic, I highly recommend my JavaScript vs. Crawl Budget article.

If one uses React + Webpack to create an isomorphic JS web application to serve pages to crawlers and end-users, what type of issues does one need to worry about in this type of setup where all pages are generated by the server?

Isomorphic JS is the recommended solution, but still, there are a lot of things you should pay attention to:

- Check if Google can see your pagination, menu links, content hidden under tabs or “tap to read more” buttons.

- Make sure the whole DOM is not recreated once a page is fetched (it may happen if the re-hydration doesn’t work properly).

- Perform a mini SEO audit of your website. There may be many non-JS based SEO issues (that happens in the case of every website).

- Go through your server logs!
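For the last point, a few lines of scripting are often enough to see what Googlebot actually requests. The sketch below assumes access logs in the common “combined” format; the regular expression, the `googlebot_hits` helper and the sample lines are illustrative, and because a user agent string can be spoofed, the result is only a first approximation.

```python
import re
from collections import Counter

# Matches the request, status and user agent parts of a combined-format line.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests per (path, status) whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[(match.group("path"), match.group("status"))] += 1
    return hits


# Made-up log lines for illustration.
sample_log = [
    '66.249.66.1 - - [10/Jun/2018:10:00:00 +0000] "GET /products HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Jun/2018:10:00:05 +0000] "GET /app.js HTTP/1.1" 200 80211 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Jun/2018:10:00:09 +0000] "GET /products HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

for (path, status), count in googlebot_hits(sample_log).items():
    print(path, status, count)
```

Seeing which pages and script files Googlebot fetches, and with what status codes, helps confirm that the server-rendered pages and their JS resources are actually being requested.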

How would JS crawling/rendering affect organic performance for sites with short-lived content?

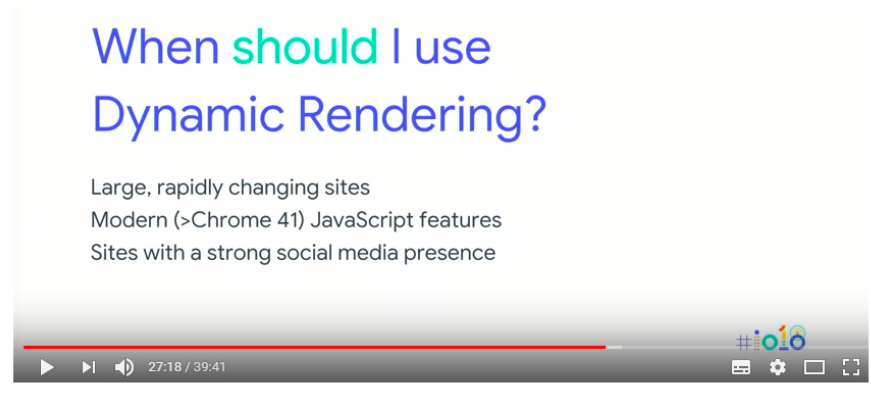

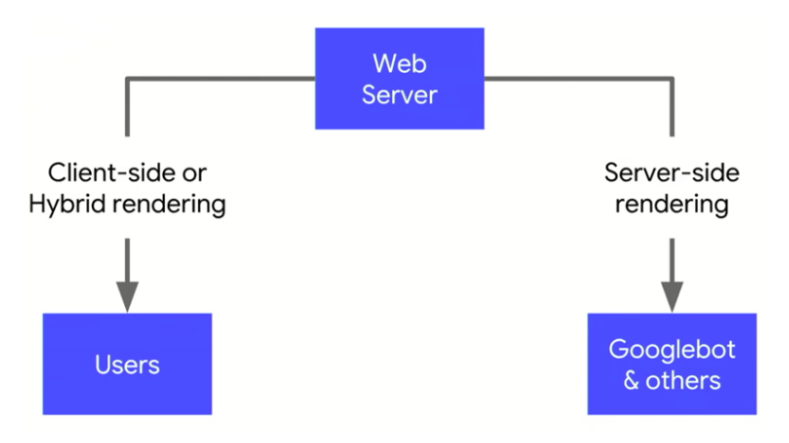

If you deal with short-lived content, I recommend implementing isomorphic JavaScript or dynamic rendering (pre-rendering for Googlebot while presenting JS content for users).

Also, I wrote an article about our crawl budget vs. JavaScript SEO experiment that explains this in detail.

What would you recommend for pre-rendering? Pre-render.io?

It is hard for me to give you a good recommendation here. I think Prerender.io is an OK choice. If you use it, make sure you specify Headless Chrome as the rendering platform (it’s the most recent solution, and is considered much better than PhantomJS).

Pre-rendering is a complex topic as every website is different. Your key focus should be having somebody who can make sure that the implementation of the solution you choose is smooth and efficient.

How difficult is it for a developer to rebuild a client-side JS-rendered page to render only server-side? Is it as hard as rebuilding from scratch?

You mean isomorphic JS? Yes, unfortunately it’s difficult for developers to implement it. Pre-rendering is considered way less complicated from the developer’s point of view.

And not every JavaScript framework allows for isomorphic JS. For example, you can’t implement isomorphic JS in Angular 1.

You can read more in my article How to Combine JavaScript & SEO With Isomorphic JS.

If you are delivering pre-rendered content to bots, will that still be subject to the two wave rendering process?

If you pre-render the content for Googlebot, it will receive a plain HTML website. So Google can discover your content and links in the first rendering wave.

Do you think the Rich Results and Mobile Friendly testing tools are actually executing JS? I’ve seen that they don’t show the same elements as Screaming Frog’s stored HTML and JS-rendered HTML.

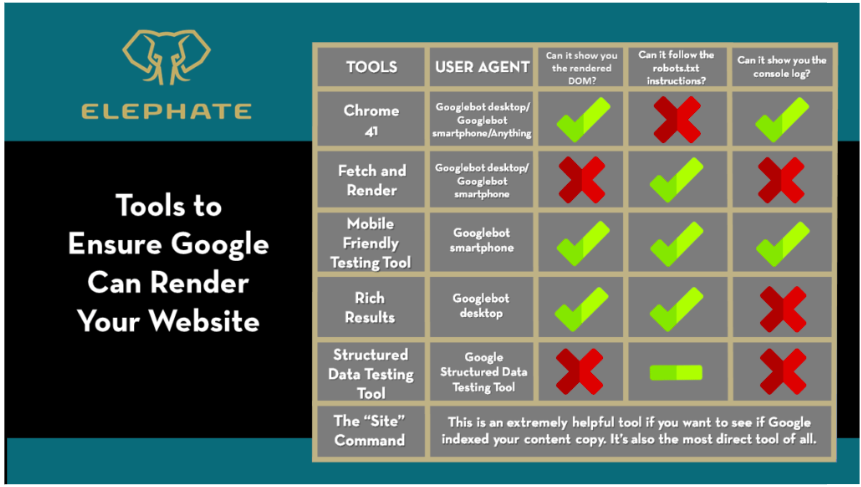

Yes, these tools do execute JS. You can learn more by looking at the table below:

The differences you encountered may be caused by the fact that Screaming Frog uses Chrome 6.x for rendering while these tools use an older solution: Chrome 41. Also, there may be different timeouts in Screaming Frog than in the case of the Rich Results Test/Mobile Friendly test.

I recently came across a site that attempts to use dynamic JavaScript ng-if robots meta tags. Is this a good idea to use this type of Meta Robots tag?

I think it should work. However, if you implement such a solution, you should make sure that the pages are indexable by default. Then, if applicable, Angular will change the meta robots directives.

Test it using the Mobile-friendly testing tool to make sure Google can deal with it. After implementing such a solution, ensure there are no soft 404 pages indexed in Google.

P.S. Make sure you use the ng-if attribute and not ng-show. If you use ng-show, Google will get conflicting signals.

Are there any major benefits of having Isomorphic JS over Pre-rendering?

Yes, of course!

Although Isomorphic JS is more difficult to implement, it has two major advantages:

- It is the solution that satisfies both users and search engines: users get the website faster, which is very important, especially on mobile devices. Search engines also get a website that is understandable for them.

- It is easier to maintain as you only need to deal with one version of your website. If you were using pre-rendering, you would have to deal with two versions of your website.

If you’re interested in learning more on this topic, you can read my article on the subject.

How do you deal with #! in url? Is pre-render still effective with the way Google will be crawling with the new AJAX scheme?

Let me start with the second question. Yes, pre-rendering is still an effective technique, as long as you use something called “dynamic rendering”.

John Mueller spoke about it during the Google I/O conference.

You can detect Googlebot by user agent and serve it a pre-rendered version. So if your users open example.com/products, they get a fully-featured JavaScript website, while Googlebot gets a pre-rendered snapshot. This technique is the successor to the old, deprecated Ajax crawling scheme (#! in URLs).
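The decision itself comes down to a user agent check. Here is a minimal sketch, assuming a simple substring match is good enough for your setup; `BOT_TOKENS`, `wants_prerendered` and `choose_variant` are hypothetical names, and the token list is something you would extend yourself.

```python
# Illustrative token list: the answer above only discusses Googlebot.
BOT_TOKENS = ("googlebot",)


def wants_prerendered(user_agent):
    """Return True when the user agent string looks like a known crawler."""
    ua = (user_agent or "").lower()
    return any(token in ua for token in BOT_TOKENS)


def choose_variant(user_agent):
    """Pick which version of the page to serve for this request."""
    if wants_prerendered(user_agent):
        return "prerendered-snapshot"
    return "javascript-app"


googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/66.0 Safari/537.36"

print(choose_variant(googlebot_ua))
print(choose_variant(browser_ua))
```

Both variants should carry the same content; only the way it is delivered differs.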

Regarding your first question:

Google is getting rid of the old Ajax crawling scheme (hashbangs in URLs, escaped fragments). So if you want Google to get a pre-rendered version of your website you have to make sure you are using dynamic rendering, as explained above.

In your opinion, can an ecommerce site built on a JS framework be competitive in this market? What would you recommend?

A lot of ecommerce websites use JS frameworks. By using techniques like pre-rendering or isomorphic JS, they can still be competitive in the market.

However, if you are asking about client-side rendered JS, there are not many examples of ecommerce websites being successful in Google.

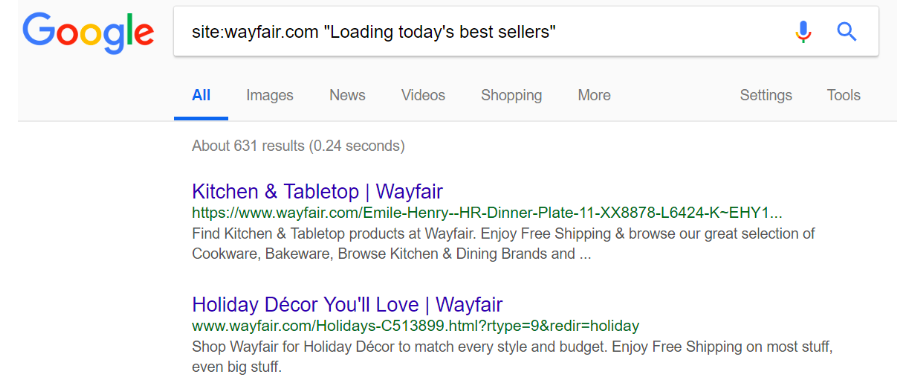

A notable example is Wayfair.com. They are performing relatively well in search. But if you look deeper, you will easily notice they usually don’t rank for keywords related to particular products. The explanation is simple: sometimes Google can’t get product listings, because it encounters timeouts. If it can’t get product listings, it can’t discover the products. Then it can’t rank those products highly, and the vicious circle is complete.

How would you run pre-rendering on the server?

It depends on the pre-rendering service you want to use, but generally, your developers shouldn’t struggle with it.

Here is how to implement pre-rendering using Prerender.io.

“The Prerender.io middleware that you install on your server will check each request to see if it’s a request from a crawler. If it is a request from a crawler, the middleware will send a request to Prerender.io for the static HTML of that page. If not, the request will continue on to your normal server routes. The crawler never knows that you are using Prerender.io since the response always goes through your server.”

In the Prerender.io documentation you can find information on how to install this middleware for servers such as Apache and Nginx.
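The request flow described in the quote can be sketched in a few lines. This is not the Prerender.io middleware itself: `make_prerender_middleware`, the dictionary-shaped request and the injected `fetch_snapshot` and `is_crawler` callables are all hypothetical stand-ins used only to show the control flow.

```python
def make_prerender_middleware(app, fetch_snapshot, is_crawler):
    """Wrap a request handler so crawlers receive static HTML snapshots.

    app            -- the normal handler: request dict -> response string
    fetch_snapshot -- callable that returns static HTML for a URL
                      (in production it would call the pre-rendering service)
    is_crawler     -- callable that inspects the user agent string
    """

    def middleware(request):
        if is_crawler(request.get("user_agent", "")):
            # Crawler: answer with the pre-rendered HTML for this URL.
            return fetch_snapshot(request["url"])
        # Everyone else continues on to the normal server routes.
        return app(request)

    return middleware


# Stand-ins so the sketch runs without a network connection.
def js_app(request):
    return "<div id='root'></div><script src='/app.js'></script>"


def fake_snapshot(url):
    return f"<h1>Static HTML for {url}</h1>"


handler = make_prerender_middleware(
    js_app,
    fake_snapshot,
    is_crawler=lambda ua: "googlebot" in ua.lower(),
)

print(handler({"url": "/products", "user_agent": "Googlebot/2.1"}))
print(handler({"url": "/products", "user_agent": "Mozilla/5.0 Chrome/66.0"}))
```

Because the response always goes through your own server, the crawler has no way to tell that the HTML came from a pre-rendering service.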

https://groceries.asda.com/ thoughts? Interesting to see no performance in Bing despite the presence of HTML on pages. I’d be interested to see the reasoning between how Google and Bing crawl the DOM and which is crawled first?

Yes, it’s a big issue these days. Bing doesn’t execute JavaScript at scale. So, if you have a JavaScript website, chances are high that it will not be indexed in Bing.

Asda.com is a pretty popular website that many people use in the UK (500k users daily according to Alexa.com). I can easily imagine how much money they are losing due to the fact that they are not accessible to Bing users.

The problem is even bigger in the US market where Bing handles 25% of search queries.

Can you give some insights on the impact of inline and external JS on SEO?

My experiments performed on five test websites showed Google was not following links if they were injected by an external JS file.

So if you’re creating a new JS-based website, you may fall into the same “trap”.

When we’re talking about real-life examples, a lot of websites using asynchronous JavaScript suffer from Google timeouts. If it takes too long for Google to fetch and execute a JavaScript file containing, for example, product listings, Google may give up on it. As a result, Google will not see any links to the product pages.

Need an example? Type the following command into Google: site:https://www.wayfair.com “Loading today’s best sellers”. Looking at the massive number of Wayfair pages returned, it’s clear that Google was not able to pick up the product listings.
