Another BrightonSEO in the books, and what a great day it was. With an amazing line-up of speakers talking about some really interesting topics, as well as free beer in the DeepCrawl beer garden and a dancing Chewbacca, it certainly was an informative and action-packed day.

Ever been to a conference with a dancing Chewbacca and robot? #brightonseo pic.twitter.com/VgoIhuMQ7j

— Fianna Hornby (@fiannahornby) September 13, 2019

There were so many great talks lined up it was hard to pick just one track to attend each session. If you had the same problem, we hope our recaps will be able to help you catch up on all the interesting insights shared. This recap will cover talks from Greg Gifford, Tim Soulo, Becky Simms, Chris Liversidge, Sabine Langmann, Mike Osolinski and Dixon Jones.

Greg Gifford – Beetlejuice’s Guide to Entities and the Future of SEO

Talk Summary

Greg Gifford, Vice President of Search at Wikimotive, spoke about the evolution of Google’s algorithm from simple keyword-to-keyword matching to understanding entities and real-world signals.

What is an entity?

An entity is far more than Urban Dictionary’s definition of ‘a being or creature’, and most people think of an entity as a person, place or thing. However, an entity doesn’t have to be just a person or an object; it can be a date, colour, time or even an idea. Google’s understanding of an entity is that it is ‘a thing or concept that is singular, unique, well defined and distinguishable’.

According to Greg, this makes entities the most important concept in SEO, forming the basis of every task we perform and tactic we use. SERP features such as rich snippets, answer boxes and zero-click searches are all based on entities, as are link building, reviews and local SEO.

How entity search has evolved

Google previously performed keyword-to-keyword matching, looking only at patterns and not at the actual meaning behind the search. The introduction of the Knowledge Graph in 2012 was Google’s first step towards laying the groundwork for entity search, with its announcement stating that it was ‘moving from keywords towards knowledge of real-world entities and their relationships’.

Closely following this was the Hummingbird update in 2013, when Google began shifting its focus towards understanding semantics. A patent in 2015 for ‘ranking search results based on entity metrics’, another in 2016 for ‘question answering using entity references in unstructured data’ and a software update in 2018 regarding ‘related entities’ all make it clear that Google is focusing on entity search. Machine learning has made this a reality for Google, with the Knowledge Graph helping it learn about entities and their relationships.

What does this mean for the future of search?

Firstly, ranking will be more about real-world signals that can’t be faked, with offline actions related to business entities helping to rank websites.

Voice search will remain all about the intent of conversational queries, which will also help Google figure out which entities to serve. Mobile search will continue to evolve and make local SEO vital for everyone.

With these combined changes, SEO will become less about writing content with the right keywords and instead be more about ensuring you have the best answer based on searcher intent.

What you should stop focusing on

While links will always matter, they will become less important as Google gets better at understanding entity signals. Keyword matching and concentrating on single pages will also become less important; instead, we need to start focusing on writing content that answers questions in a unique way.

What you should focus on

In the future, Google will understand everything related to the entities of a keyword and the intent of searchers. It is therefore important to pay attention to how your entity is connected to other entities.

Local SEO will also become key to success for many businesses, with Google My Business (GMB) acting as a direct interface to Google’s entity database about your business. It is important to pay attention to the images featured on your business’s GMB listing, as well as the directions, reviews, posts and the recently introduced Q&A feature.

Tim Soulo – Rethinking The Fundamentals of Keyword Research With The Insights From Big Data

Talk Summary

Tim Soulo, CMO & Product Advisor at Ahrefs, spoke about the conventional practices of keyword research and shared his three-step approach to researching and presenting both the traffic and business potential of keywords.

Total search traffic potential

A page will never rank for just one keyword. In fact, research by Tim and the Ahrefs team found that the average page ranking number 1 also ranks for 1,000 other keywords.

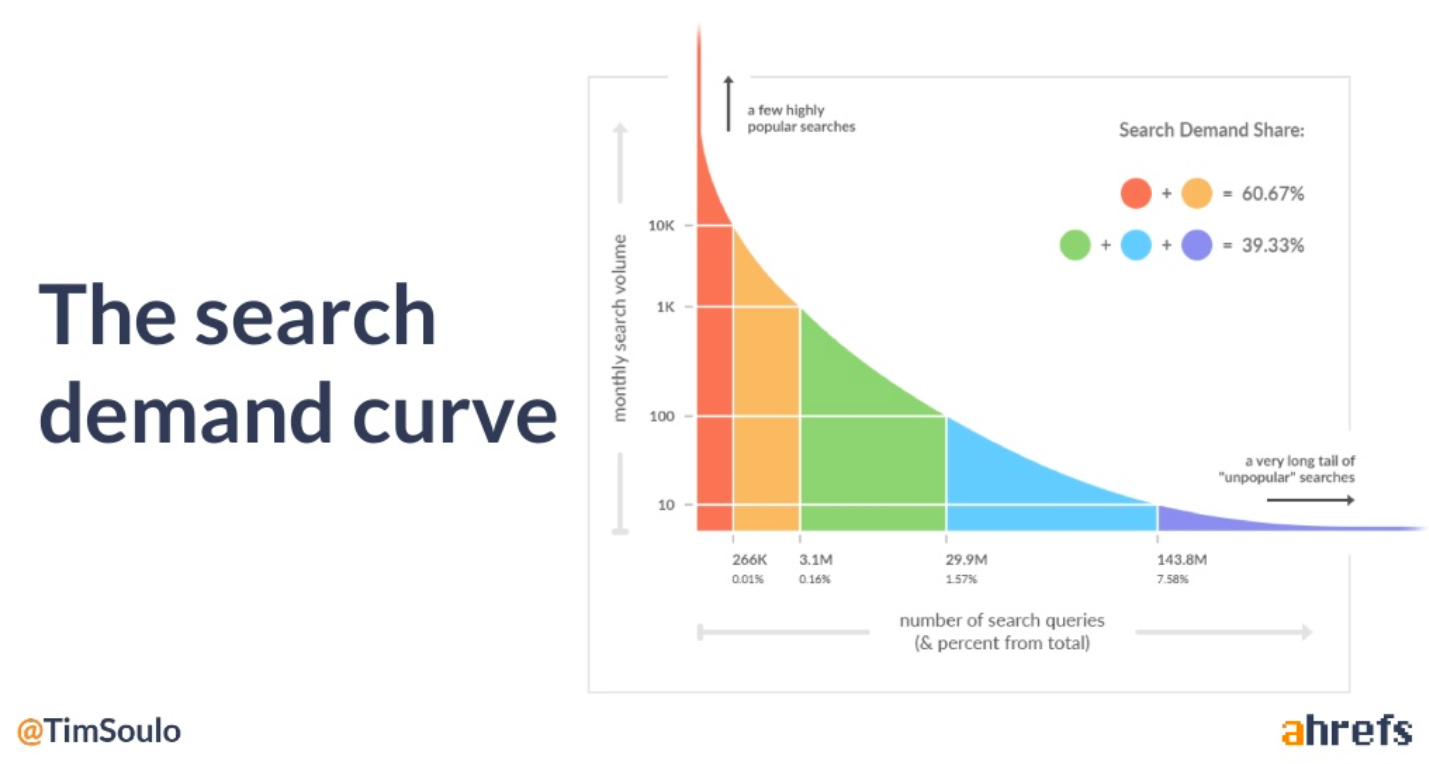

This is because each search topic has a search demand curve of its own, and some terms have a very long tail.

The total search traffic potential of each topic depends on the number of ways people search for it, as everyone searches for topics differently. In addition, Google now understands keyword intent, so it will also rank pages for related terms it deems relevant.

How to apply this

It’s important not to look only at the search volume of keywords. Analyse the top-ranking pages for the keywords you want to rank for, find out how many keywords they rank for in total, and pay attention to the long-tail keywords.
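To make the idea concrete, here is a minimal Python sketch: instead of judging a topic by its head keyword alone, it sums the traffic the top-ranking page earns across every keyword it ranks for. The `RankingKeyword` class and all of the figures are hypothetical; in practice the numbers would come from a keyword tool export.

```python
from dataclasses import dataclass


@dataclass
class RankingKeyword:
    keyword: str
    search_volume: int      # monthly searches for the keyword
    estimated_traffic: int  # monthly visits the page receives from it


def total_traffic_potential(rankings: list[RankingKeyword]) -> int:
    """Traffic a top-ranking page earns across every keyword it ranks for."""
    return sum(r.estimated_traffic for r in rankings)


def long_tail_share(rankings: list[RankingKeyword], head_keyword: str) -> float:
    """Fraction of the page's traffic that comes from keywords other than the head term."""
    total = total_traffic_potential(rankings)
    head = sum(r.estimated_traffic for r in rankings if r.keyword == head_keyword)
    return (total - head) / total if total else 0.0


# Hypothetical keyword export for the page ranking #1 for "running shoes".
rankings = [
    RankingKeyword("running shoes", 40_000, 3_000),
    RankingKeyword("best running shoes", 12_000, 2_500),
    RankingKeyword("running trainers", 8_000, 1_200),
    RankingKeyword("comfortable running shoes for beginners", 900, 300),
]

print(total_traffic_potential(rankings))                     # 7000
print(round(long_tail_share(rankings, "running shoes"), 2))  # 0.57
```

In this made-up example, more than half of the page's estimated traffic comes from keywords other than the head term, which is exactly what a volume-only view would miss.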

Business potential

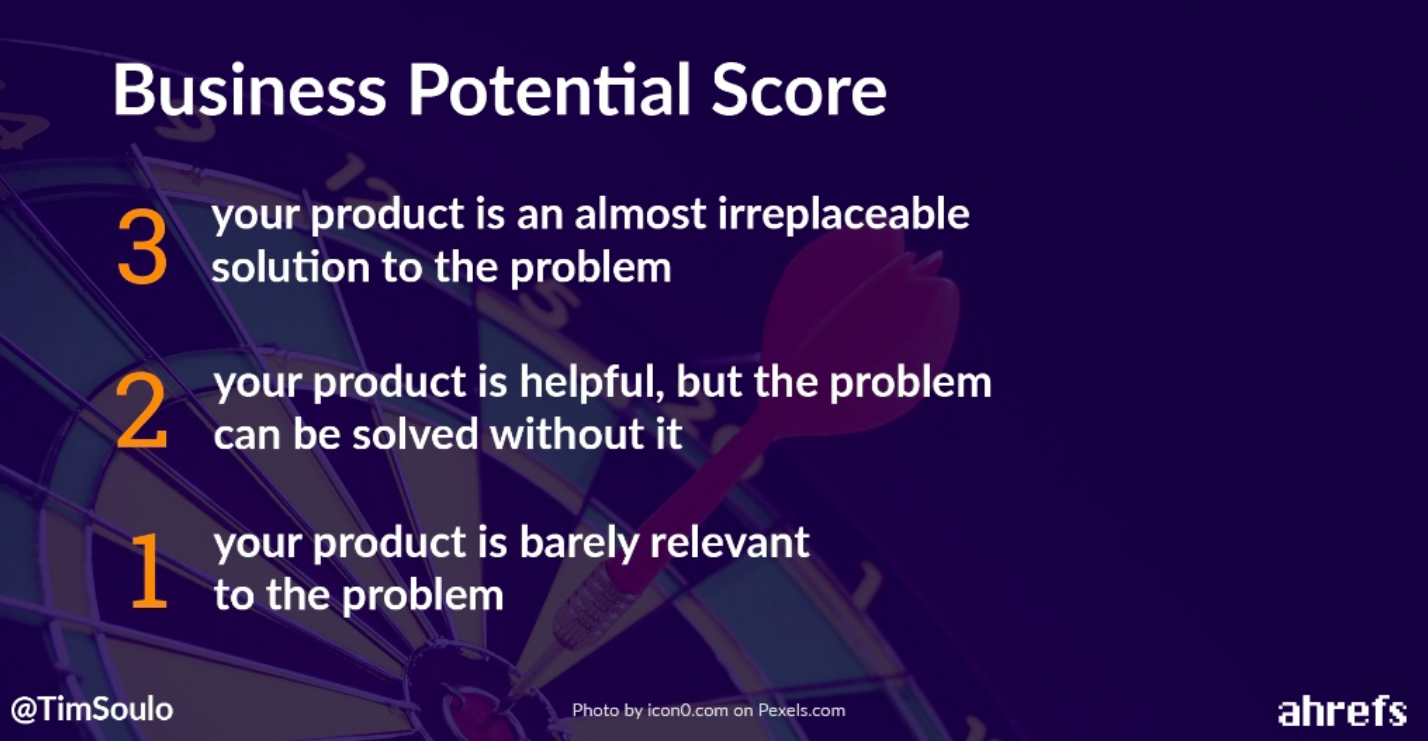

It is one thing to get traffic to your site; it is another to make sure that traffic converts. Implementing a business potential score for each page on your site will help you to prioritise your keyword research activities. Tim’s business potential scoring is as follows:

3 – your product is almost irreplaceable

2 – helpful for the problem but can be solved without it

1 – barely relevant to the problem
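One way to put the score to work is to rank topic ideas by business potential first and traffic potential second. The sketch below assumes that ordering rule and uses invented topics and numbers; Tim did not prescribe this exact formula.

```python
from dataclasses import dataclass

# The 1-3 scale summarised above: 3 = product almost irreplaceable,
# 2 = helpful but the problem can be solved without it, 1 = barely relevant.
VALID_SCORES = {1, 2, 3}


@dataclass
class TopicIdea:
    topic: str
    traffic_potential: int   # e.g. estimated total traffic of the current #1 page
    business_potential: int  # 1-3 score


def prioritise(ideas: list[TopicIdea]) -> list[TopicIdea]:
    """Order ideas by business potential first, then traffic potential (both descending)."""
    for idea in ideas:
        if idea.business_potential not in VALID_SCORES:
            raise ValueError(f"{idea.topic!r}: business potential must be 1, 2 or 3")
    return sorted(
        ideas,
        key=lambda idea: (idea.business_potential, idea.traffic_potential),
        reverse=True,
    )


# Hypothetical topic ideas for illustration only.
ideas = [
    TopicIdea("what is seo", 20_000, 1),
    TopicIdea("keyword research tool", 5_000, 3),
    TopicIdea("how to do keyword research", 8_000, 2),
    TopicIdea("free keyword tools", 6_000, 3),
]

for idea in prioritise(ideas):
    print(idea.business_potential, idea.traffic_potential, idea.topic)
```

Sorting on business potential first means a high-volume but barely relevant topic ends up at the bottom of the list, however much traffic it could bring.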

Ranking Potential

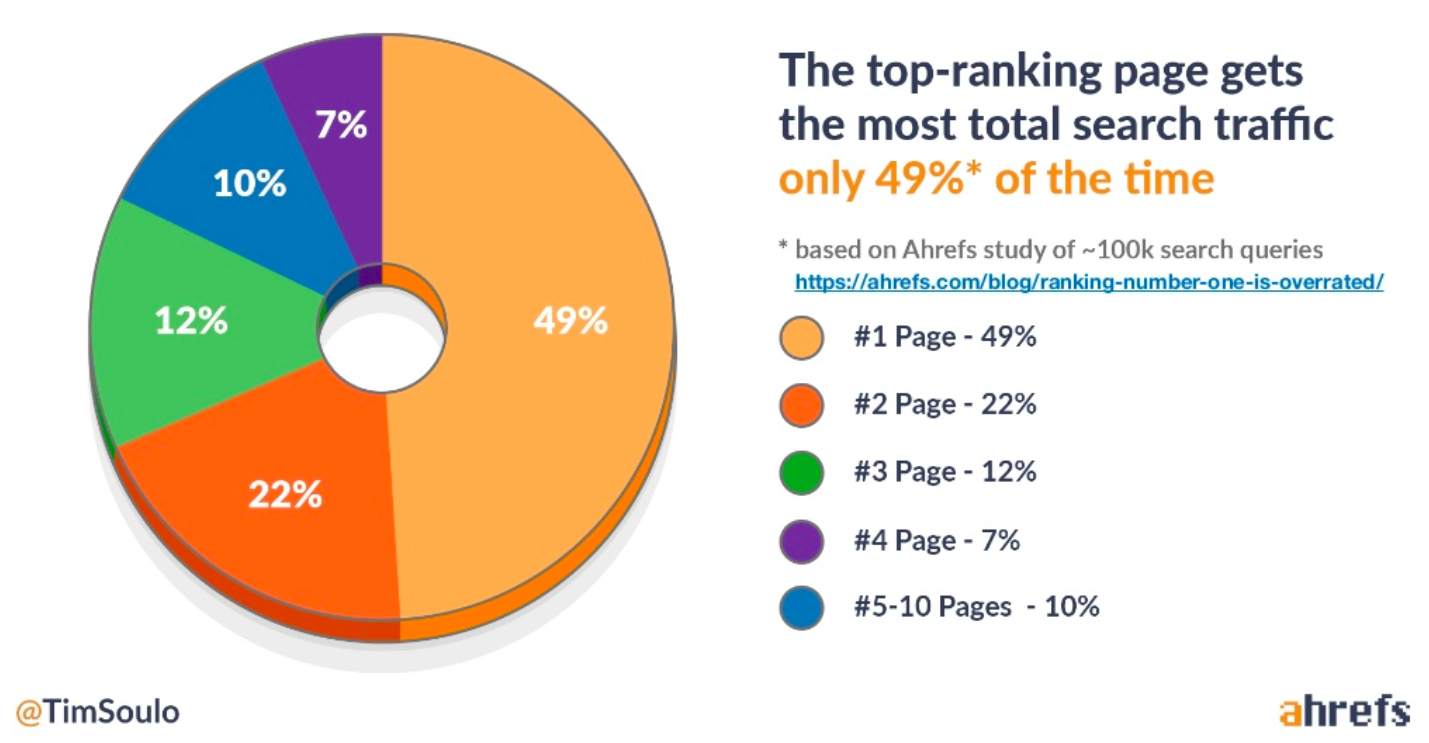

Ranking number 1 on Google is most people’s goal, particularly for bosses and clients. However, Tim explained that ranking number 1 is overrated: being at the top of Google does not guarantee you the most visitors.

An Ahrefs study of 100K search queries found that the top-ranking page gets the most total search traffic only 49% of the time.
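Reading that statistic as “for each query, does the position 1 page also have the most total search traffic?”, the calculation can be sketched in a few lines of Python. The traffic numbers below are invented and are not Ahrefs’ data; ties are counted as a win for position 1, which is an assumption.

```python
def share_where_top_result_wins(serps: list[list[int]]) -> float:
    """Fraction of queries where the position-1 page has the most total search traffic.

    Each inner list holds the total search traffic of the pages ranking at
    positions 1, 2, 3, ... for one query. Ties count as a win for position 1.
    """
    if not serps:
        return 0.0
    wins = sum(1 for traffic in serps if traffic and traffic[0] == max(traffic))
    return wins / len(serps)


# Four hypothetical SERPs (monthly traffic of each ranking page).
serps = [
    [900, 400, 100],  # #1 gets the most traffic
    [300, 1200, 50],  # #2 wins, e.g. because it ranks for many more keywords
    [500, 500, 20],   # tie, counted as a win for #1
    [200, 250, 600],  # #3 wins
]
print(share_where_top_result_wins(serps))  # 0.5
```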

How to outperform position 1

The secret to outperforming position 1 is to focus on search intent, while also covering the topic broadly and earning more backlinks.

Becky Simms – The future of search is understanding human psychology

Talk Summary

Managing director at Reflect Digital, Becky Simms, spoke about how we, as SEOs, need to focus less on bots, algorithms and SERPs and instead shift our focus to concentrate more on the humans behind the searches. You can download the slides here.

Understanding humans will help us do SEO better

Economists have realised that people don’t always respond rationally, or as we might expect, to things such as value and price, while neuroscientists have discovered that all the decisions we make are ‘weighed up emotionally’, even the logical ones. So if we don’t focus on humans when writing content for our websites, we are failing our audience.

Let’s talk about humans

Digital marketing has become extremely measurable, but is our focus on metrics coming at the expense of the content and the humans we are creating it for? Thanks to Google’s RankBrain algorithm and advances in AI, Google is able to mimic human behaviour more than ever before. This enables us to create human-first content and talk to humans as humans, rather than writing content just for search engine bots.

Sparking action from users

To be truly good at SEO, we need to encourage action from website visitors, and this begins with thinking about the human behind the search phrase. It is no secret that as SEOs we get caught up in algorithms and the constant updates to SERPs, but shifting our focus to neuro-driven content will enable us to create a memorable online experience for users.

The power of language

A surprising amount of content on the web is uninspiring and dry, instead of being sticky, memorable and emotive. It is also important to strike a balance between self-obsessed and selfless language: instead of just broadcasting what the product or service offers, you need to show how it can help solve the customer’s problems.

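Ensuring there is a balance between static and dynamic language is key when writing content, as the context of the words you include can carry a lot of power for the end user. Becky illustrated this with the Florida Effect, a priming study in which participants who worked with words associated with old age (such as ‘Florida’) reportedly walked more slowly afterwards.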

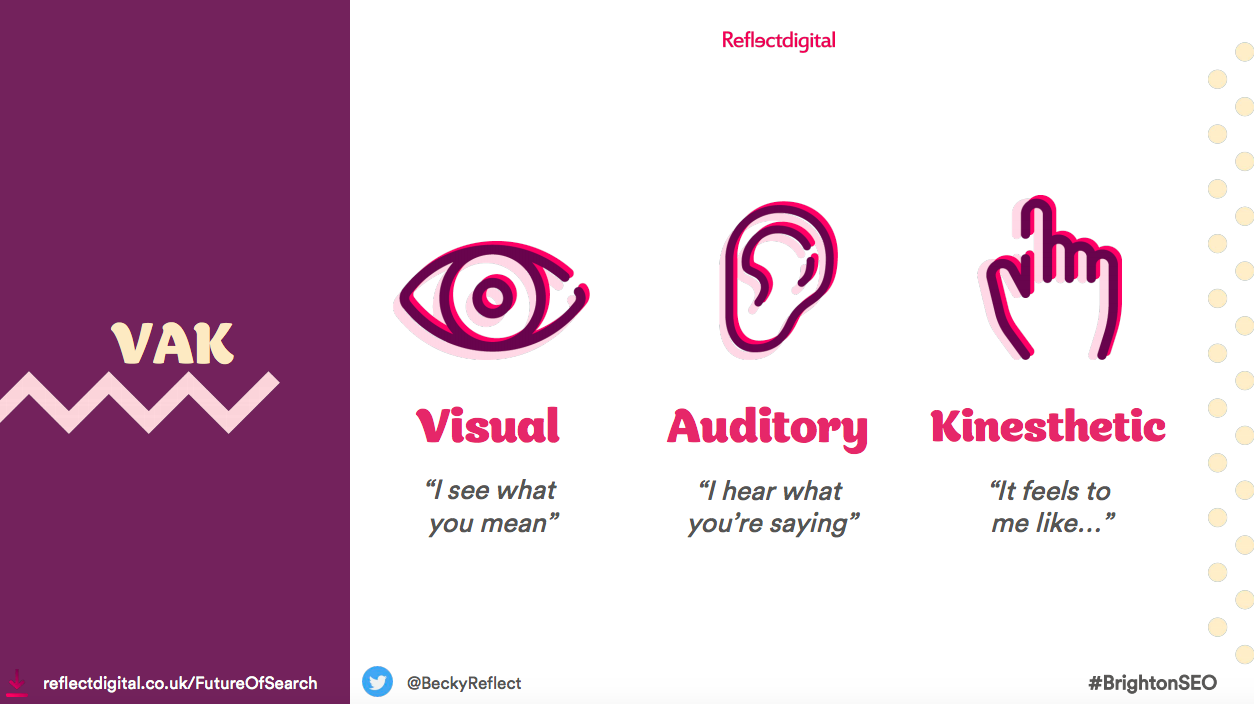

Visual, Auditory and Kinesthetic Language

Everybody has a language style that they prefer, and engage with more, when reading website content. For some this is visual, others prefer auditory, while the rest lean more towards kinesthetic language.

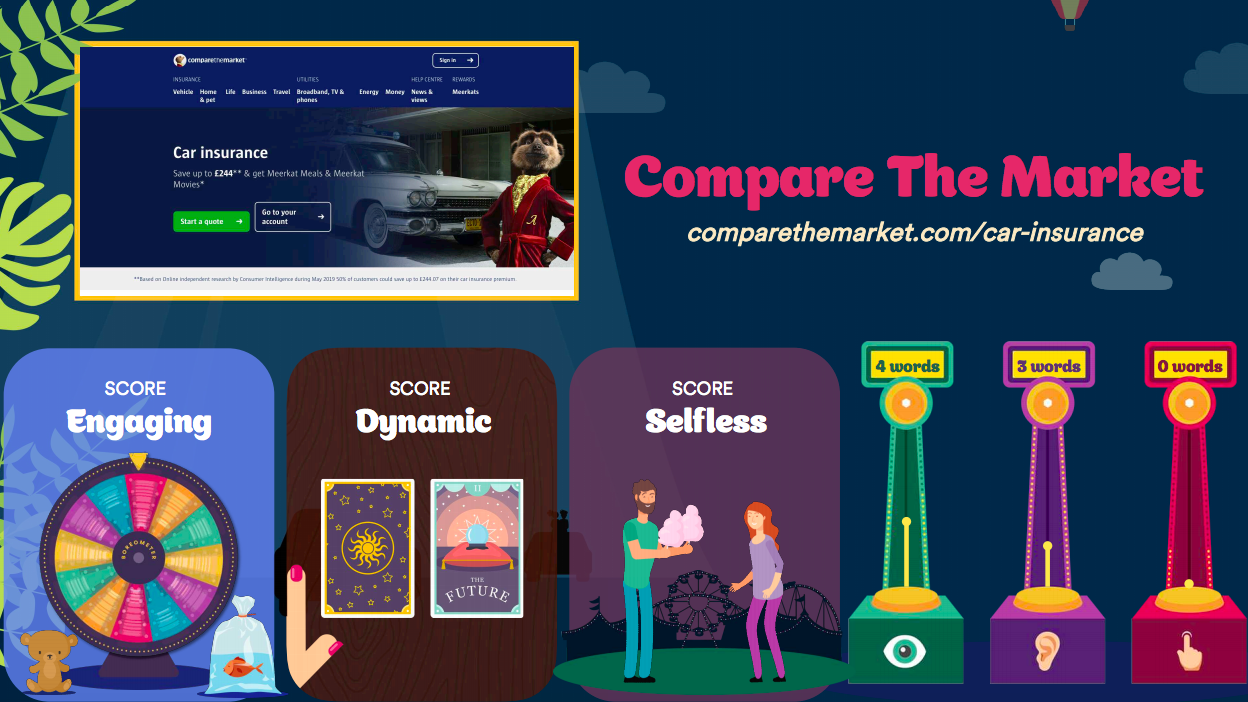

Becky also shared a new tool that she and the team at Reflect Digital have been working on, called Rate My Content. It evaluates the content of a web page and generates results on how engaging the copy is, whether it is static or dynamic, and how selflessly it is written. A score is also given showing the weighting of visual, auditory and kinesthetic language in the content. You can check it out here.
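How Rate My Content works internally wasn’t covered, but a very rough approximation of a visual/auditory/kinesthetic weighting is to count sensory words from each category. The sketch below is a hypothetical illustration with tiny, made-up word lists, not the tool’s method.

```python
import re
from collections import Counter

# Tiny, hypothetical word lists for illustration only; a real tool would
# need much larger, carefully curated vocabularies.
VAK_WORDS = {
    "visual": {"see", "look", "bright", "picture", "clear", "vivid"},
    "auditory": {"hear", "listen", "sound", "loud", "quiet", "tell"},
    "kinesthetic": {"feel", "touch", "grasp", "smooth", "warm", "solid"},
}


def vak_weighting(text: str) -> dict[str, float]:
    """Share of matched sensory words that fall into each VAK category."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter()
    for word in words:
        for style, vocabulary in VAK_WORDS.items():
            if word in vocabulary:
                counts[style] += 1
    total = sum(counts.values())
    return {style: (counts[style] / total if total else 0.0) for style in VAK_WORDS}


copy = "See the bright colours, hear the waves and feel the warm sand."
print(vak_weighting(copy))  # {'visual': 0.4, 'auditory': 0.2, 'kinesthetic': 0.4}
```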

Here are Becky’s top tips on how we can resonate more with our audience, grabbing their attention and evoking emotion.

1. Be more descriptive and fun with language.

2. Bring emotion to the content you are writing.

3. Think about your audience’s challenges and needs first, before broadcasting what you do.

4. Think about context and impact rather than just explaining facts.

5. Consider how you can use visual, auditory and kinesthetic language.

6. Ensure you strike the right balance between providing human-first content and the right signals for Google.

7. Remember, through content, you have the power to affect your users’ behaviour.

Chris Liversidge – How Machine Learning Insights Change the Game for Enterprise SEO (A 20x ROI Case Study)

Talk Summary

Chris Liversidge, founder of QueryClick, spoke about unifying analytics data, the importance of attribution and how machine learning is changing the game for SEO.

How machine learning can be used

Machine learning can be used to uncover connections that we as humans cannot see, as well as to generate data that isn’t otherwise available to us.

For example, by training machine learning models such as random forests, SEOs can join data silos, running a large number of tests until the data is matched as closely as possible to what they are looking for.

Machine learning for traffic attribution

Many Google Analytics accounts suffer from broken attribution, from keywords showing as ‘not set’ to inconsistent traffic source and location data. This makes digital marketing and SEO approaches ineffective and their results wrongly calculated.

By applying a machine learning cleansing process to one of his clients’ data, Chris was able to reveal 334% more data than the analytics program they were using. This showed that £48 million of revenue had been wrongly attributed.
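The cleansing model itself wasn’t shown. As a much simpler stand-in for the general idea, the sketch below fills in sessions whose channel is “(not set)” using a k-nearest-neighbour vote over sessions whose channel is known. This is a swapped-in technique, not Chris’s process, and the feature choice and data are hypothetical.

```python
import math
from collections import Counter

NOT_SET = "(not set)"


def fill_not_set_channels(sessions: list[dict], k: int = 3) -> list[dict]:
    """Replace '(not set)' channels with the majority channel of the k nearest labelled sessions.

    Each session is a dict with a numeric 'features' tuple and a 'channel' string.
    The input list is not modified; filled sessions are returned as new dicts.
    """
    labelled = [s for s in sessions if s["channel"] != NOT_SET]
    if not labelled:
        raise ValueError("need at least one session with a known channel")
    cleaned = []
    for session in sessions:
        if session["channel"] != NOT_SET:
            cleaned.append(session)
            continue
        # Euclidean distance in feature space to every labelled session.
        nearest = sorted(labelled, key=lambda s: math.dist(s["features"], session["features"]))[:k]
        channel = Counter(s["channel"] for s in nearest).most_common(1)[0][0]
        cleaned.append({**session, "channel": channel})
    return cleaned


# Hypothetical features: (pages per session, minutes on site, is_mobile).
sessions = [
    {"id": 1, "features": (1.0, 0.5, 1), "channel": "paid"},
    {"id": 2, "features": (1.2, 0.7, 1), "channel": "paid"},
    {"id": 3, "features": (1.1, 0.6, 1), "channel": "paid"},
    {"id": 4, "features": (5.0, 8.0, 0), "channel": "organic"},
    {"id": 5, "features": (4.5, 7.0, 0), "channel": "organic"},
    {"id": 6, "features": (5.5, 9.0, 0), "channel": "organic"},
    {"id": 7, "features": (1.05, 0.55, 1), "channel": NOT_SET},
    {"id": 8, "features": (5.1, 8.2, 0), "channel": NOT_SET},
]

for session in fill_not_set_channels(sessions)[-2:]:
    print(session["id"], session["channel"])  # 7 paid, then 8 organic
```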

Using predictive models

Predictive machine learning models can assist with a number of SEO tasks, as well as uncover useful data. For example, Chris implemented a predictive model for one of his clients which was able to analyse conversion data from their website and calculate how it is affected by offline marketing activities.
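Chris didn’t share the model itself. As a rough sketch of the simplest version of the idea, assuming you have weekly offline spend and website conversions, an ordinary least-squares line estimates how many extra conversions are associated with each unit of offline spend. A real predictive model would include many more variables (seasonality, other channels), and the figures here are invented.

```python
from statistics import mean


def fit_line(x: list[float], y: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length series with at least two points")
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


# Hypothetical weekly data: offline (e.g. TV) spend in £k vs website conversions.
offline_spend = [0, 10, 20, 30, 40]
conversions = [102, 118, 141, 159, 180]

slope, intercept = fit_line(offline_spend, conversions)
print(f"~{slope:.2f} extra conversions per £1k of offline spend")  # ~1.97
print(f"baseline with no offline spend: {intercept:.1f}")          # 100.6
print(f"forecast at £25k: {slope * 25 + intercept:.0f}")           # 150
```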

Machine learning also offers the ability to uncover trends in hard-to-measure markets, as well as to understand cannibalisation between your paid and organic efforts.

Chris ended his talk by encouraging us to use deep data insights to rethink our marketing strategy and objectives.

Sabine Langmann – How Basic Programming Skills Can Save You a Ton of Time

Talk Summary

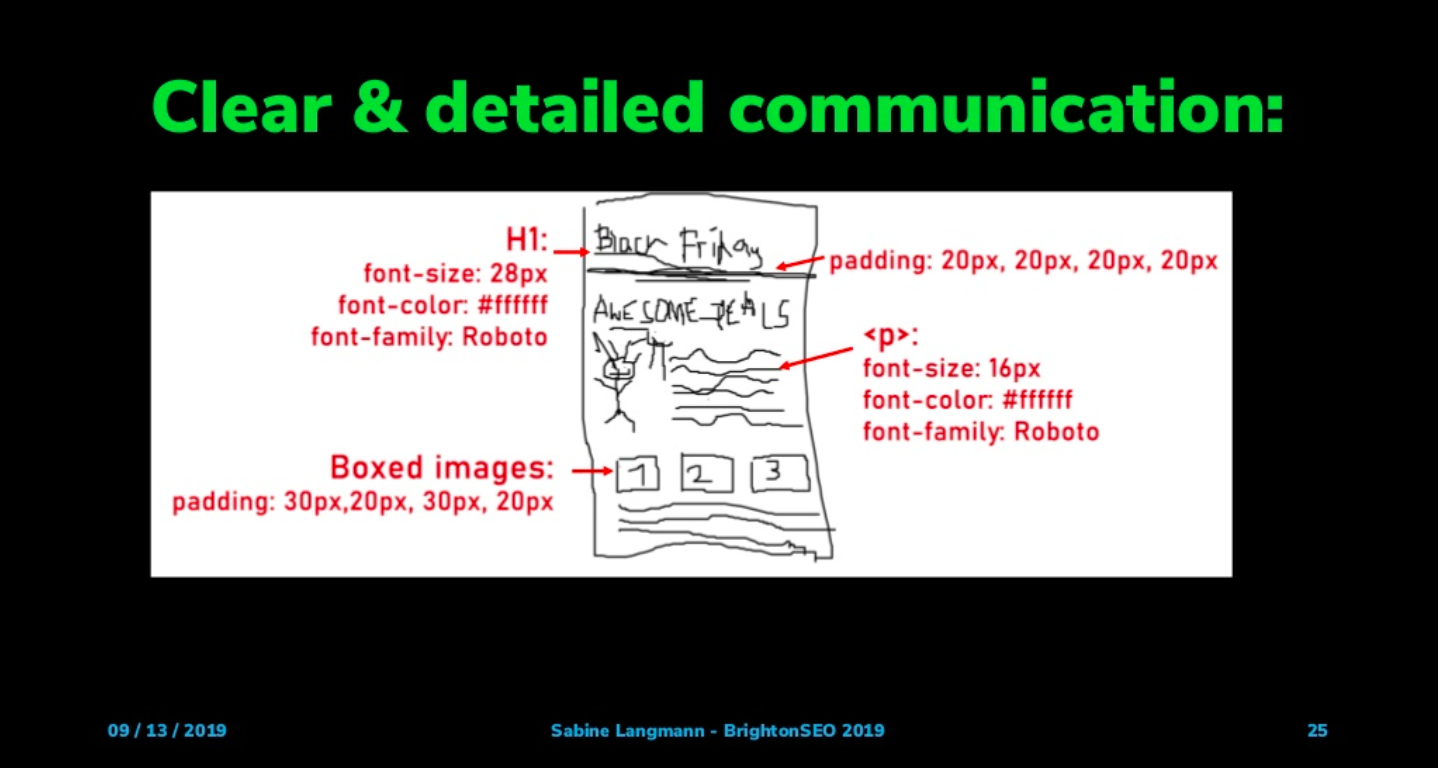

In this session, marketing consultant Sabine Langmann explained how much time can be saved if we, as SEOs, learn some basic programming skills from CSS and PHP to Python and XPath.

Which framework for which task?

CSS

CSS (Cascading Style Sheets) is used to take care of your website’s styling and design. Having a basic understanding of this language will enable you to communicate better with your developers to create more appealing, higher-converting pages on your website.

From her research, Sabine estimates the one-time investment of learning CSS is around 10 hours, but this will save you 3 hours a week.

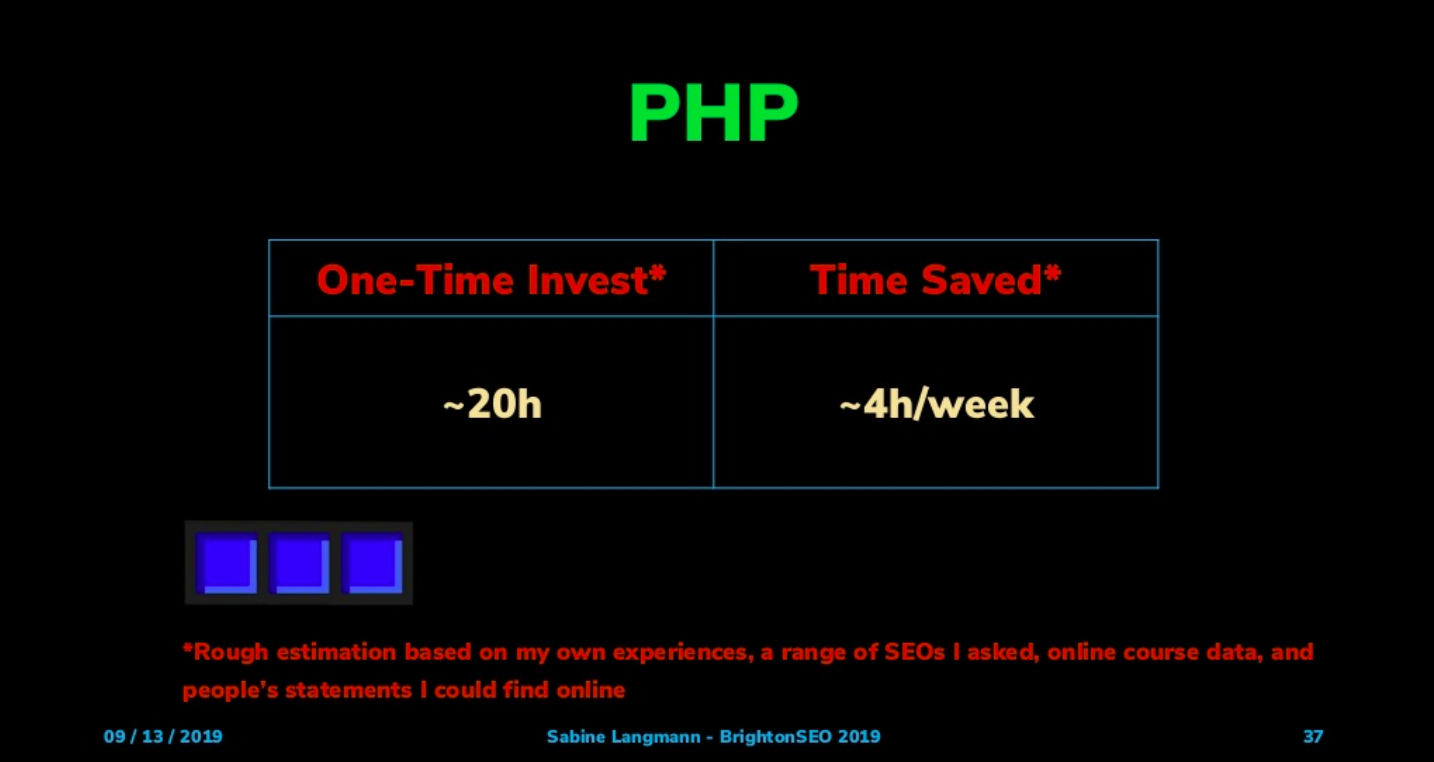

PHP

PHP is a server-side scripting language. Knowing it allows you to find the relevant code elements on your website a lot quicker and gives you the knowledge to customise key parts of your own or your client’s website.

The one-time investment for learning PHP is on average 20 hours but can provide a time saving of 4 hours per week.

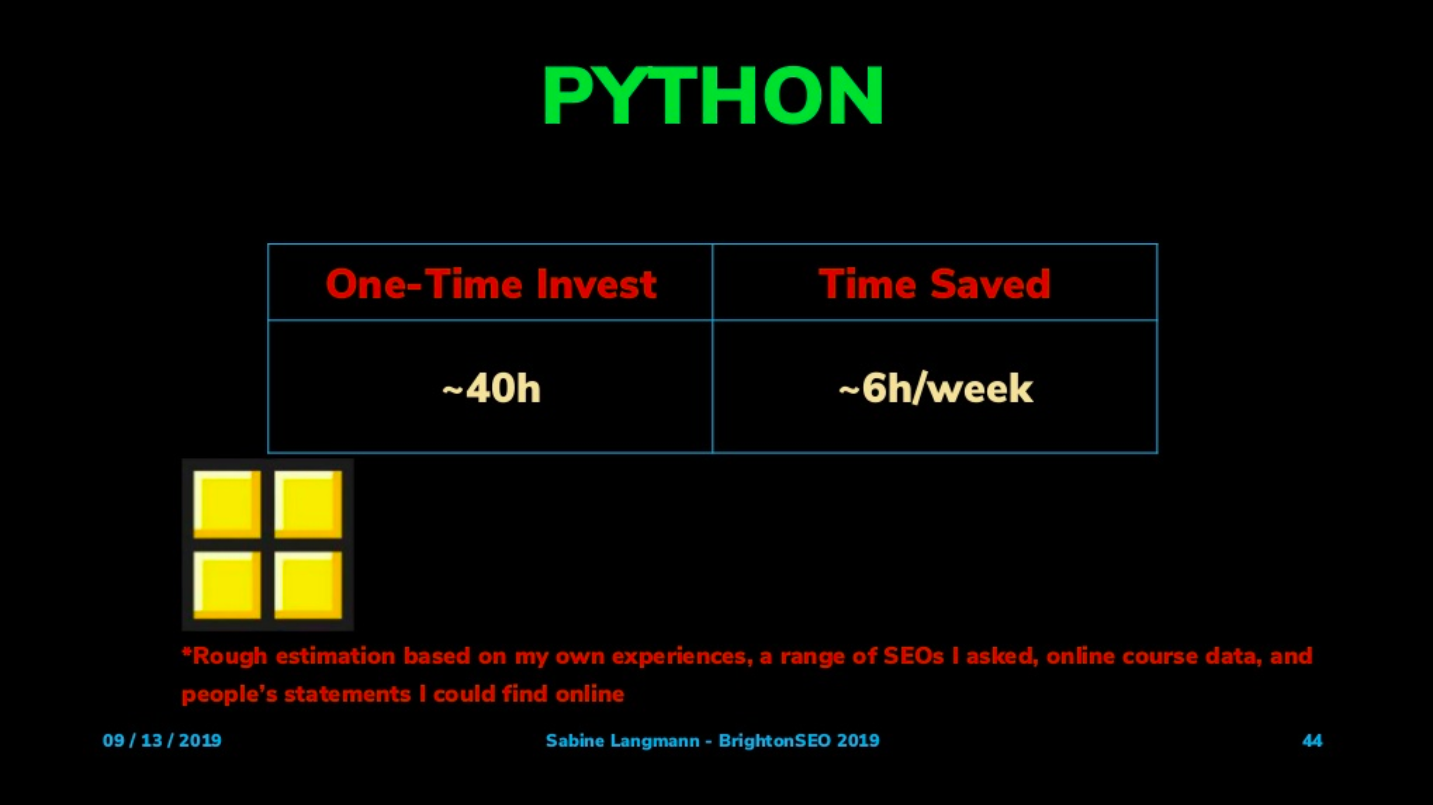

Python

Python is a programming language that enables data extraction, analysis and visualisation, while also powering machine learning models. With Python, you can automate regular website checks and analyse SEO issues without the need for Excel.
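As a small example of the kind of regular check that can be automated, the sketch below uses only Python’s standard library to flag a missing or overlong title and a missing or overlong meta description. The 60 and 160 character limits are common rules of thumb and an assumption here, not Google rules. It works on an HTML string so it runs offline; in practice you might fetch each page first with `urllib.request.urlopen`.

```python
from html.parser import HTMLParser

# Rule-of-thumb length limits; treat these as assumptions.
MAX_TITLE_LENGTH = 60
MAX_DESCRIPTION_LENGTH = 160


class HeadAuditParser(HTMLParser):
    """Collects the <title> text and the meta description from an HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta_description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.meta_description = attributes.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_html(html: str) -> list[str]:
    """Return a list of simple on-page issues found in the HTML."""
    parser = HeadAuditParser()
    parser.feed(html)
    parser.close()
    issues = []
    title = parser.title.strip()
    if not title:
        issues.append("missing title")
    elif len(title) > MAX_TITLE_LENGTH:
        issues.append(f"title longer than {MAX_TITLE_LENGTH} characters")
    if not parser.meta_description:
        issues.append("missing meta description")
    elif len(parser.meta_description) > MAX_DESCRIPTION_LENGTH:
        issues.append(f"meta description longer than {MAX_DESCRIPTION_LENGTH} characters")
    return issues


page = "<html><head><title>Blue Widgets | Example Shop</title></head><body></body></html>"
print(audit_html(page))  # ['missing meta description']
```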

While learning Python requires a larger time investment, of around 40 hours, a working knowledge of the language will save you, on average, 6 hours a week.

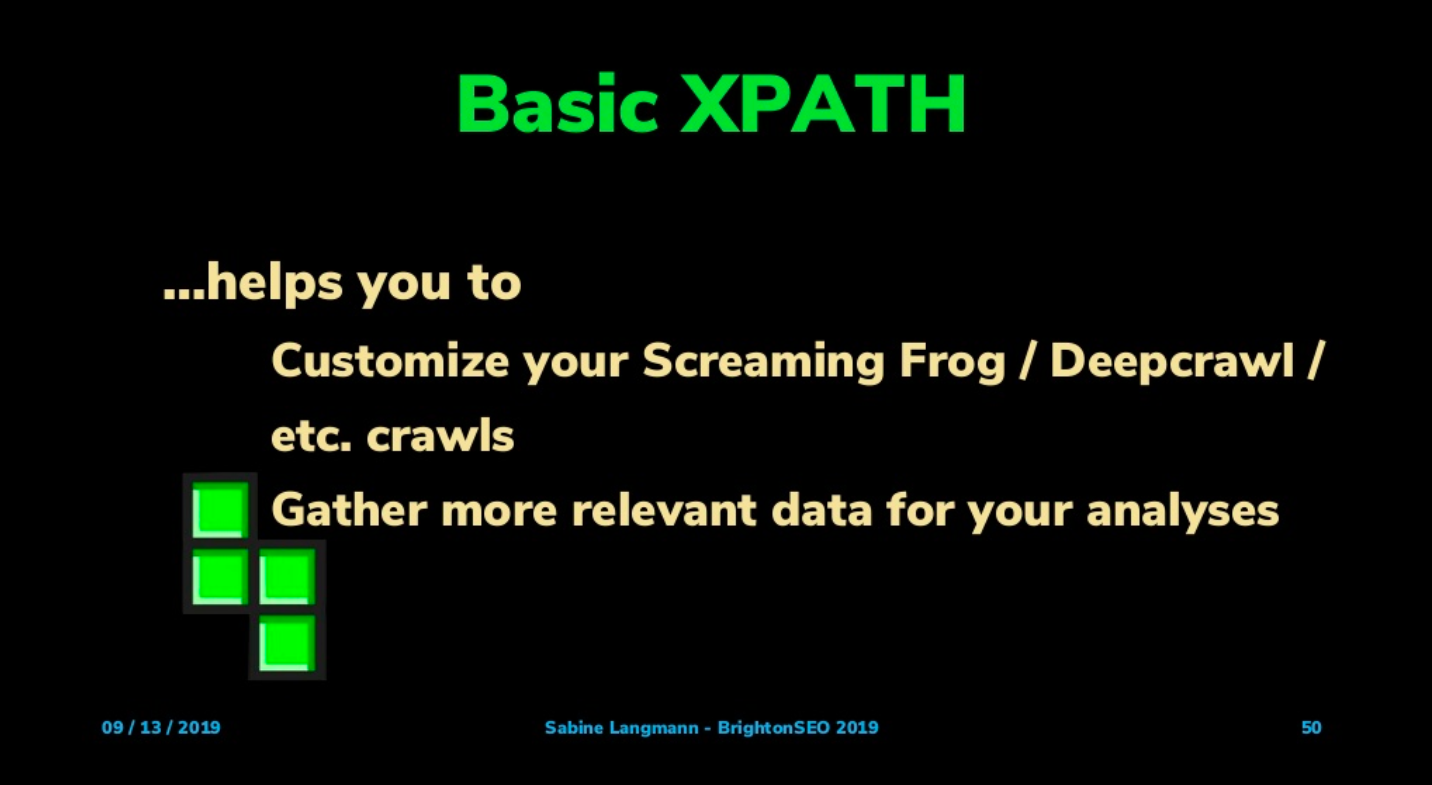

XPath

XPath is a query language used to select nodes from an XML document. Basic XPath knowledge will allow you to scrape specific elements on a page by customising your site crawls, gathering more relevant data for your analysis.
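For a quick taste of XPath-style selection without a crawler, Python’s built-in `xml.etree.ElementTree` supports a limited subset of XPath. The sketch below runs against a hypothetical XML sitemap: it selects every `<loc>` node and lists URLs that have no `<lastmod>`. Crawlers and libraries such as lxml support far more of the XPath language than this.

```python
import xml.etree.ElementTree as ET

# Namespace used by XML sitemaps (sitemaps.org protocol).
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap for illustration.
SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc><lastmod>2019-09-01</lastmod></url>
  <url><loc>https://www.example.com/blog/</loc><lastmod>2019-09-12</lastmod></url>
  <url><loc>https://www.example.com/contact/</loc></url>
</urlset>"""


def all_locs(xml_text: str) -> list[str]:
    """Every <loc> node, selected with the XPath-style path './/sm:loc'."""
    root = ET.fromstring(xml_text)
    return [(loc.text or "").strip() for loc in root.findall(".//sm:loc", NS)]


def urls_missing_lastmod(xml_text: str) -> list[str]:
    """URLs whose <url> node has no <lastmod> child."""
    root = ET.fromstring(xml_text)
    return [
        url.findtext("sm:loc", default="", namespaces=NS).strip()
        for url in root.findall("sm:url", NS)
        if url.find("sm:lastmod", NS) is None
    ]


print(all_locs(SITEMAP))
print(urls_missing_lastmod(SITEMAP))  # ['https://www.example.com/contact/']
```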

With a one-time investment of just 5 hours, XPath knowledge will save you an average of 2 hours per week.
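Sabine’s estimates lend themselves to a quick payback calculation: divide the one-time learning cost by the weekly saving. The sketch below uses only the figures quoted above; the 52-week horizon is an assumption.

```python
# (one-time hours to learn, average hours saved per week), as estimated in the talk.
SKILLS = {
    "CSS": (10, 3),
    "PHP": (20, 4),
    "Python": (40, 6),
    "XPath": (5, 2),
}


def break_even_weeks(hours_to_learn: float, hours_saved_per_week: float) -> float:
    """Weeks until the time saved equals the time invested in learning."""
    return hours_to_learn / hours_saved_per_week


def net_hours_saved(hours_to_learn: float, hours_saved_per_week: float, weeks: int) -> float:
    """Hours saved after a number of weeks, minus the one-time learning cost."""
    return hours_saved_per_week * weeks - hours_to_learn


for skill, (learn, saved) in SKILLS.items():
    print(f"{skill}: pays back after {break_even_weeks(learn, saved):.1f} weeks, "
          f"net {net_hours_saved(learn, saved, 52):.0f} hours saved over 52 weeks")
```

On these estimates, XPath pays back fastest (2.5 weeks), and even Python, the biggest investment, pays for itself in under seven weeks.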

Sabine concluded her session by sharing her ‘Tech Swag’ which consists of tech knowledge = more confidence = more clear communication = more credibility = more motivation = increased happiness.

Mike Osolinski – Utilizing the power of PowerShell for SEO

Talk Summary

Mike Osolinski, a freelance technical SEO consultant, explored the command-line tool PowerShell, explaining how it can be used to perform SEO tasks including website scraping, connecting to APIs and manipulating data.

Why should you automate SEO processes

There are a number of benefits to automating SEO processes using the free tools available to us. Spending less time gathering and formatting data provides more time to focus on the analysis and recommendations of your audit. Automating tasks will also make your business more profitable and enable you to do the same amount of work but in a shorter amount of time. It will also allow teams to handle greater workloads, while ultimately providing SEOs with an easier life.

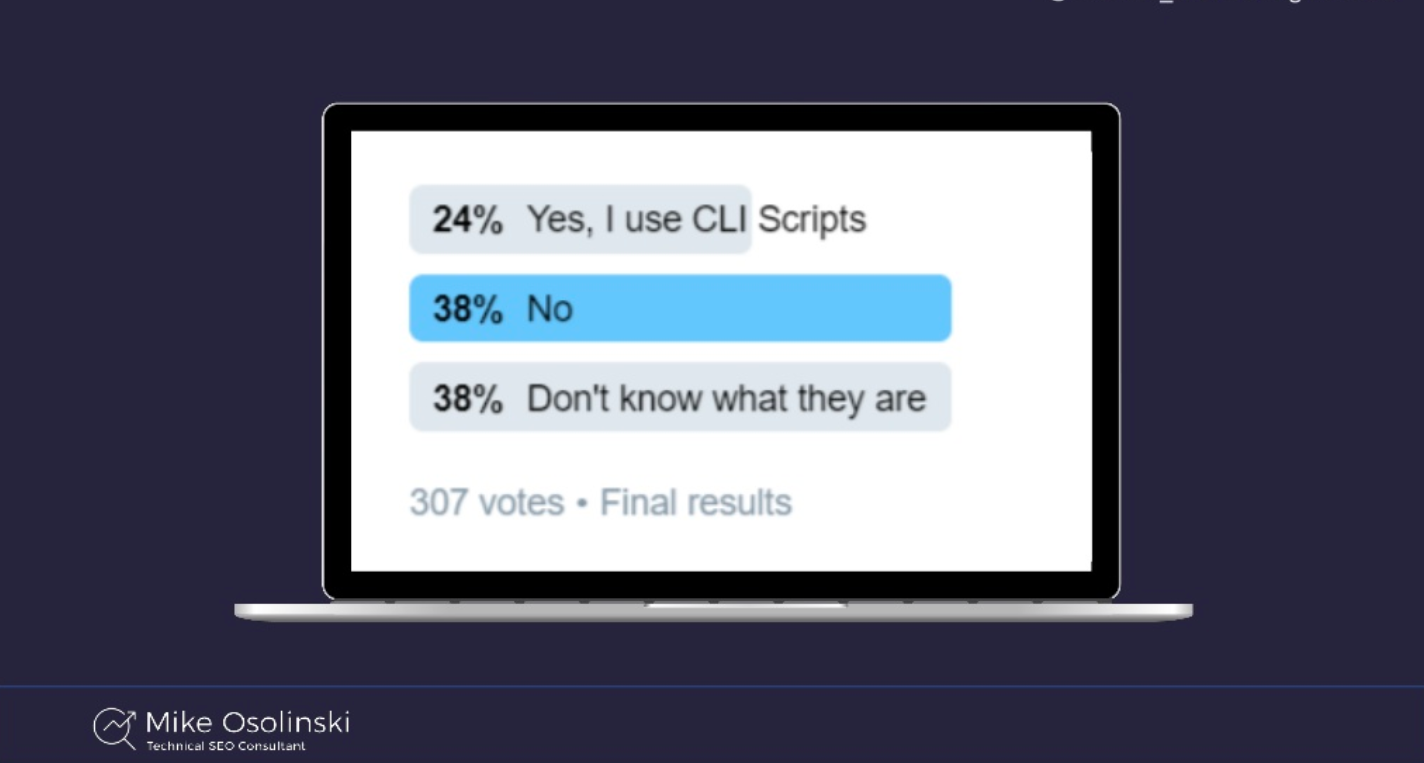

Why don’t more people automate processes?

A lot of the time people will not automate their processes due to a fear that they will break something or that it is too hard and time-consuming to learn and implement. There is also a lack of awareness around the potential time savings of automating repetitive tasks.

What is PowerShell?

PowerShell is a task-based command-line shell and scripting language built on .NET which helps users rapidly automate tasks that manage operating systems and processes. Or, as Mike says, it gives you superpowers.

PowerShell is already installed on most Windows computers and is an open-source, cross-platform tool with a large community. Essentially, it makes working with data easy and reduces the need to write complex formulas and expressions.

Why should SEOs care?

There are a number of SEO tasks you can automate with PowerShell, including:

- Scraping websites

- Automating website interactions

- Running crawl reports

- Extracting and manipulating data

Cmdlet examples

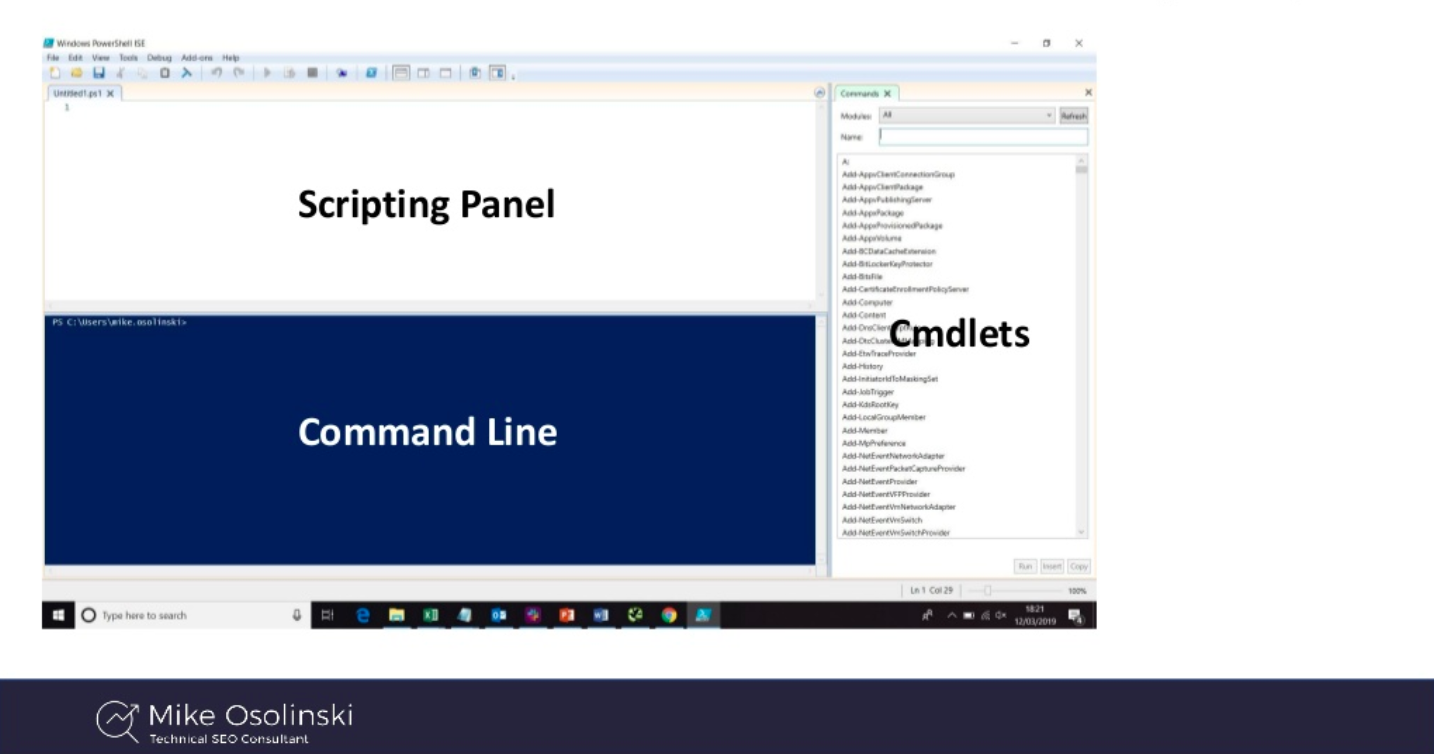

When opening PowerShell, you will see the Scripting Panel, the Command-Line and the Cmdlets, which are lightweight commands that each perform a single function.

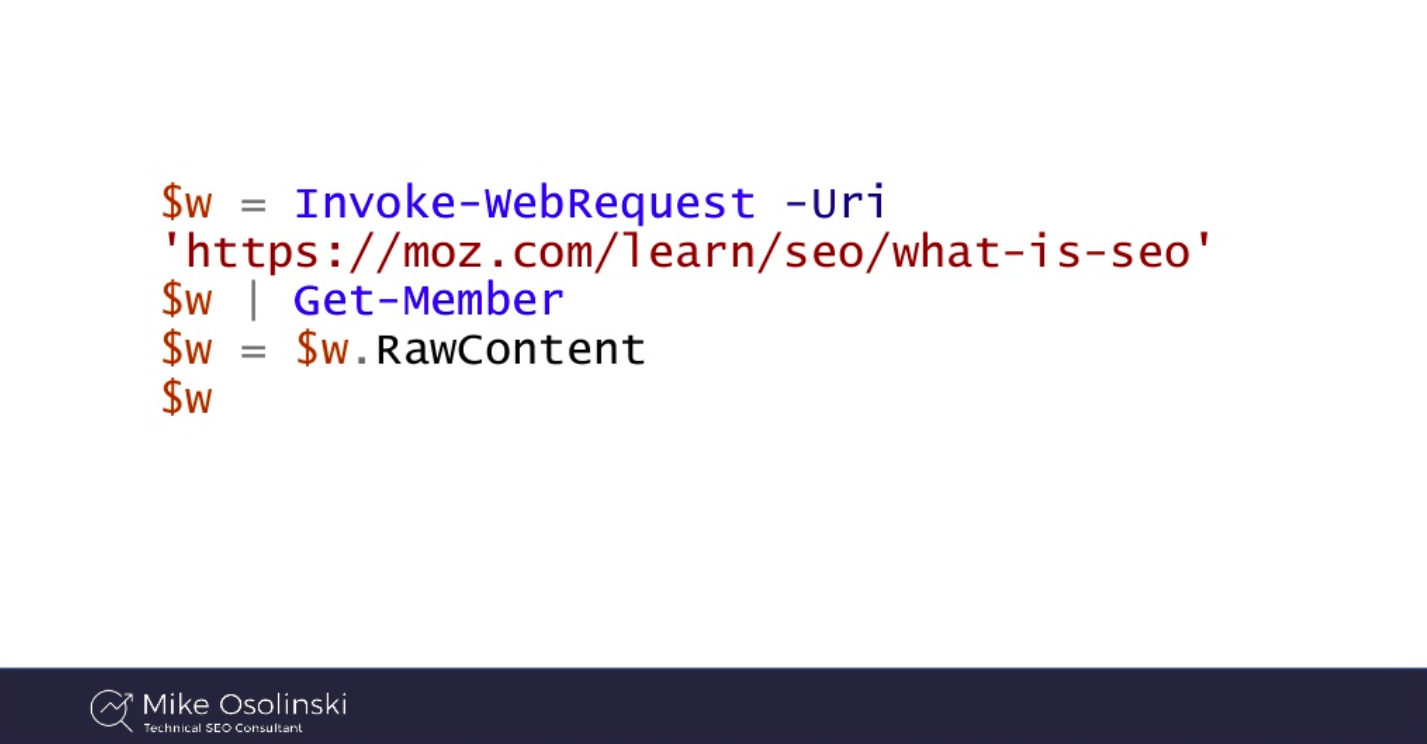

Invoke-WebRequest

This cmdlet sends HTTP and HTTPS requests to a web page or web service, then parses the response and returns collections of links, images and other HTML elements.

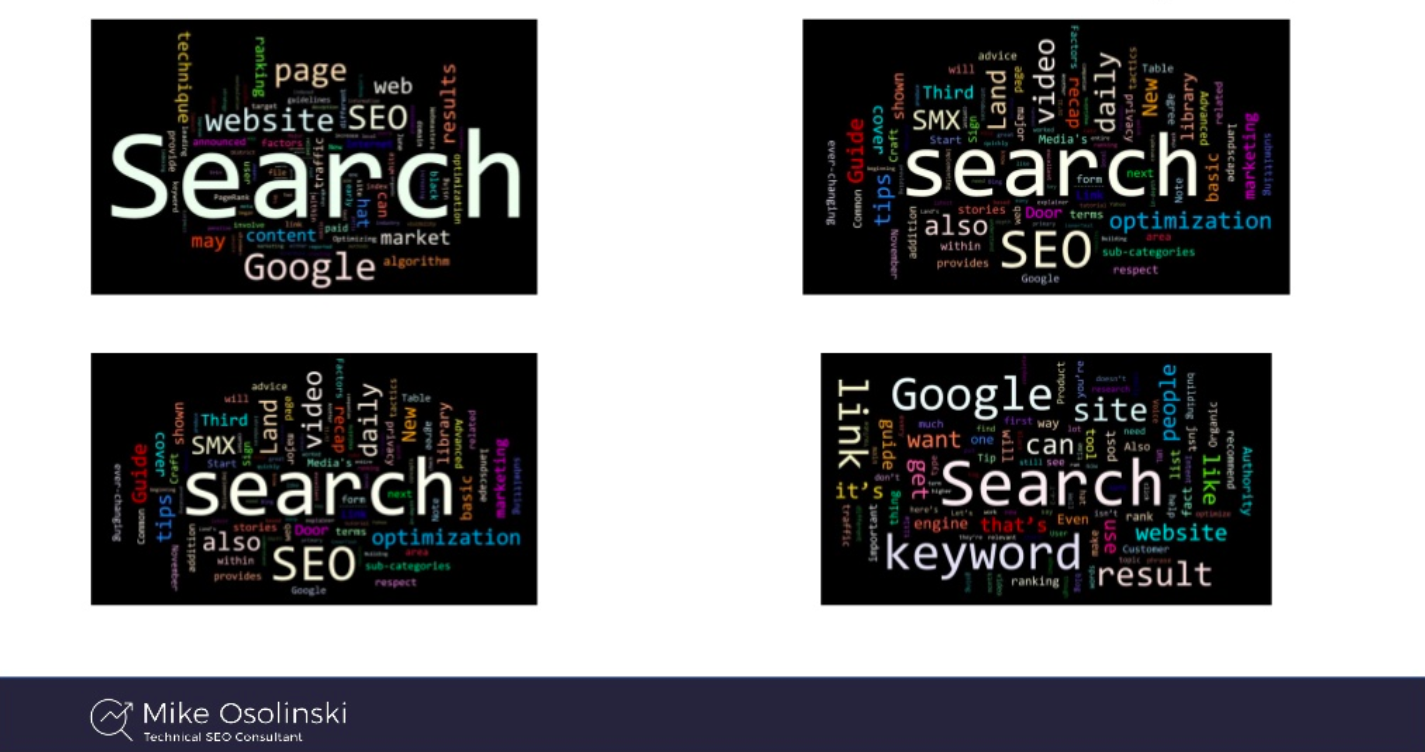

PowerShell turns everything into objects, which provides simple access to the images, links, content and forms that have been returned. This allows you to export the data to a CSV file and even create word clouds to visualise the text on the page.
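Mike’s examples were in PowerShell. To keep the sketches in this recap in a single language, here is a rough Python analogue of the same workflow: pull every link out of a page and export the results as CSV. It uses only the standard library and works on an HTML string so it runs offline; the page snippet and base URL are hypothetical.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects (absolute URL, rel) pairs for every <a href> on a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href:
            # Resolve relative links against the page URL.
            self.links.append((urljoin(self.base_url, href), attributes.get("rel") or ""))


def links_to_csv(html: str, base_url: str) -> str:
    """Extract links from HTML and return them as CSV text with a header row."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    collector.close()
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["url", "rel"])
    writer.writerows(collector.links)
    return buffer.getvalue()


html = '<a href="/about/">About</a> <a href="post-1/" rel="nofollow">Post</a> <a>No link</a>'
print(links_to_csv(html, "https://www.example.com/blog/"))
```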

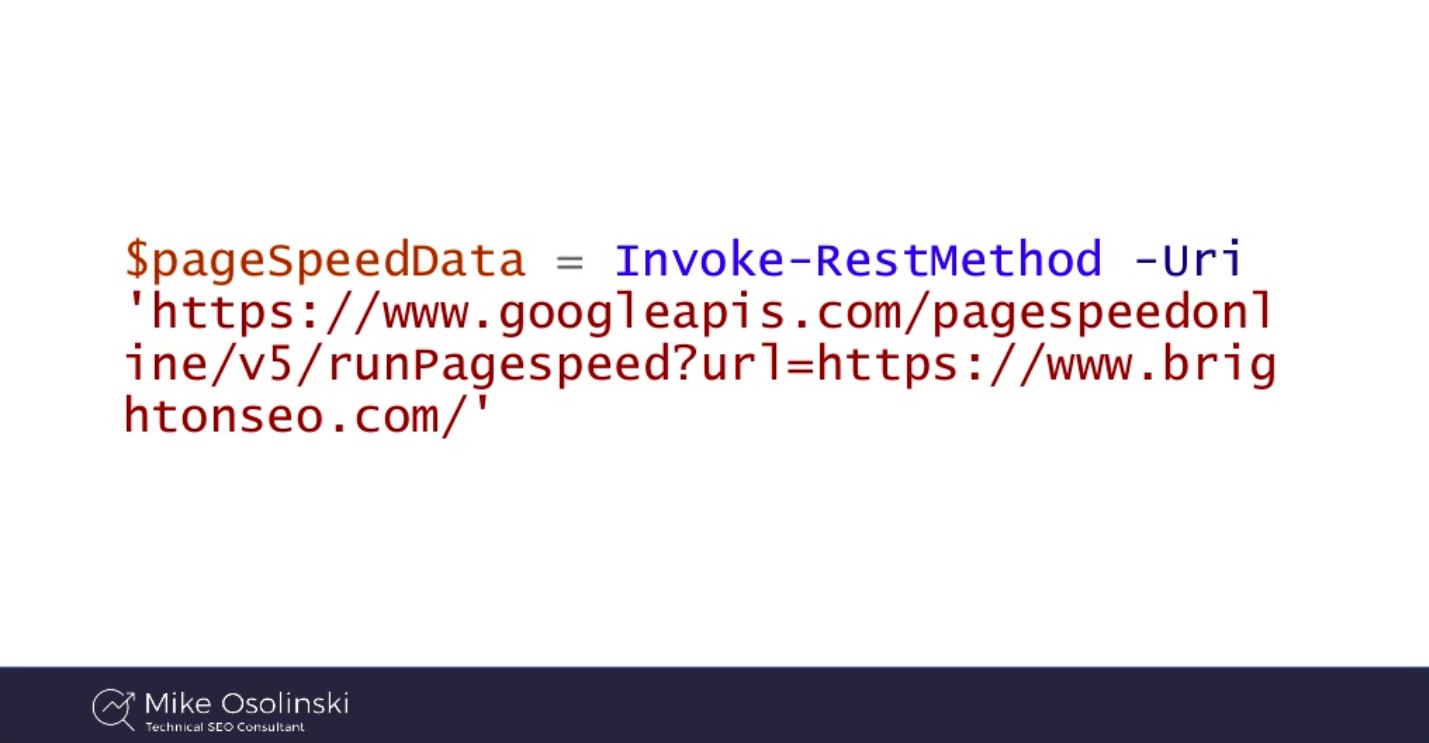

Invoke-RestMethod

Similar to Invoke-WebRequest, this cmdlet sends web requests, but it is designed for REST web services and converts JSON or XML responses into PowerShell objects. An example use case is calling the PageSpeed API and looking at individual areas of the audit.

Once again, this can be exported to a CSV file, enabling easy analysis of the data. PowerShell also works with any REST API.
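For comparison, here is a Python sketch of the PageSpeed step: flatten the Lighthouse audits in a PageSpeed Insights v5 response into CSV rows. Fetching the response (for example with `urllib.request` against `https://www.googleapis.com/pagespeedonline/v5/runPagespeed?url=...`) is left out so the example runs offline; the sample below is a heavily trimmed, hand-written stand-in for a real response, and only the `title`, `score` and `displayValue` fields are assumed.

```python
import csv
import io
import json


def audits_to_csv(response: dict) -> str:
    """Flatten the Lighthouse audits in a PageSpeed Insights v5 response into CSV text."""
    audits = response.get("lighthouseResult", {}).get("audits", {})
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["audit_id", "title", "score", "display_value"])
    for audit_id, audit in sorted(audits.items()):
        writer.writerow([
            audit_id,
            audit.get("title", ""),
            audit.get("score"),  # may be null for informative audits; written as an empty cell
            audit.get("displayValue", ""),
        ])
    return buffer.getvalue()


# Heavily trimmed, hand-written sample in the shape of a real response.
sample = json.loads("""{
  "lighthouseResult": {
    "audits": {
      "uses-text-compression": {"title": "Enable text compression", "score": 1},
      "first-contentful-paint": {"title": "First Contentful Paint", "score": 0.92, "displayValue": "1.2 s"}
    }
  }
}""")
print(audits_to_csv(sample))
```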

As an incredibly versatile tool, PowerShell is not just for system and network administrators, and it’s important to understand the automation opportunities it offers.

Dixon Jones – How PageRank Really Works

Talk summary

Majestic’s Global Brand Ambassador, Dixon Jones, explained Google’s PageRank algorithm and broke down the formula with an overview of his observations and insights into the maths behind it.

What is PageRank?

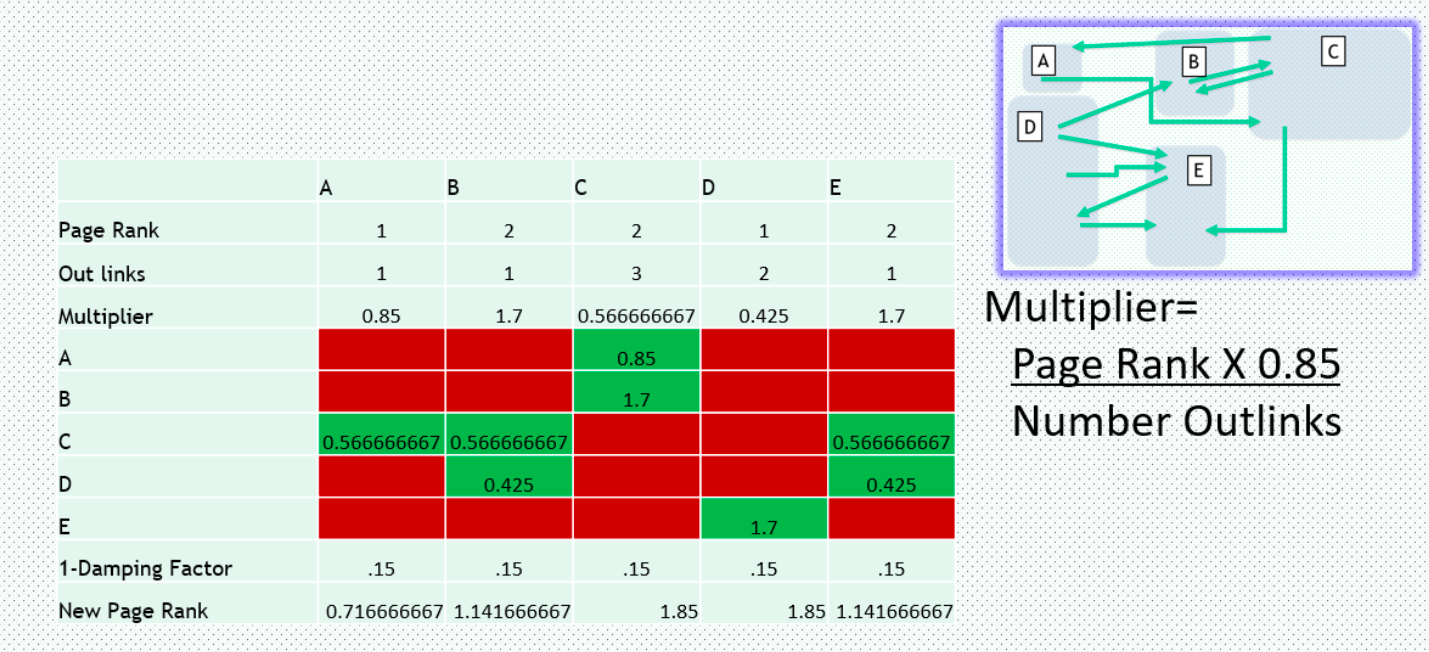

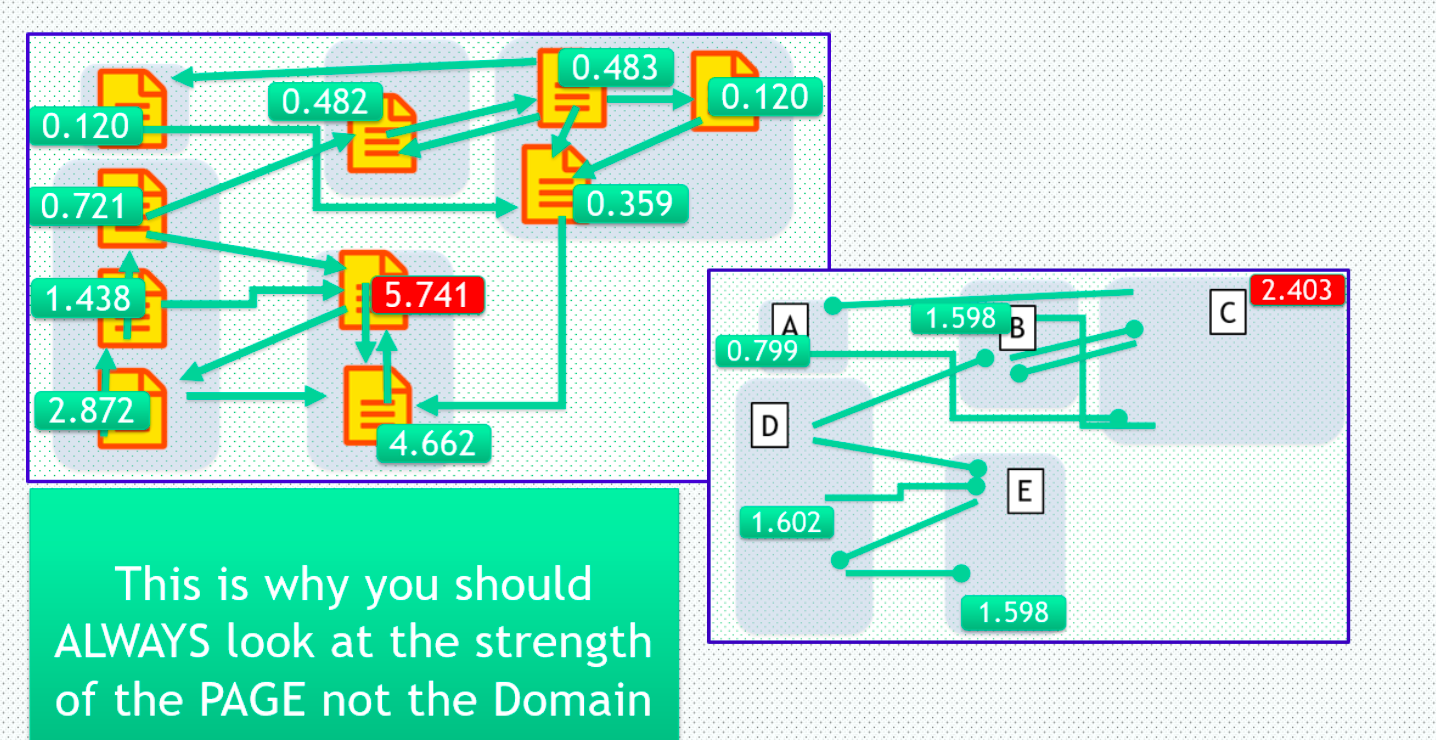

Dixon described PageRank as an early form of machine learning. It looks at how many backlinks are going into a page and how many links are going out, as well as at how other pages on your site link to a given page.

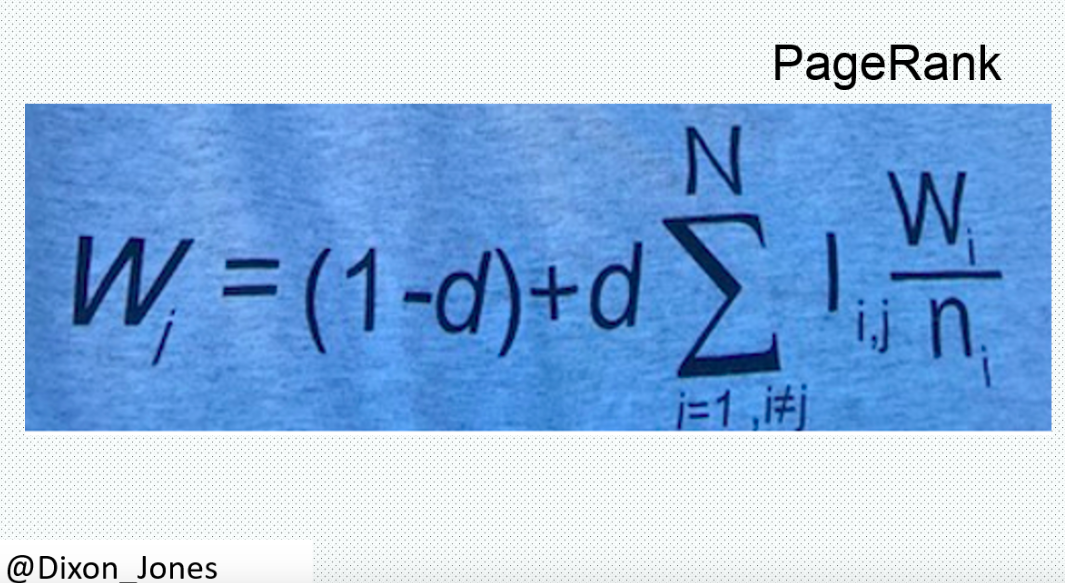

The PageRank calculation

Essentially, PageRank looks at the number of backlinks going into a page, while also taking into account how many links are going out of each linking page. In the example Dixon shared, each page’s starting PageRank was the number of backlinks pointing to it. From there, he calculated a multiplier for each page by multiplying its PageRank by 0.85 (the damping factor) and dividing by its number of outlinks. A blanket 0.15 (that is, 1 minus the damping factor) is then added to each page’s total.
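For reference, the formula from Brin and Page’s original 1998 paper, which uses the same 0.85 and 0.15 figures, is below; Dixon’s spreadsheet may lay it out differently. Here T_1 to T_n are the pages linking to page A, and C(T_i) is the number of outlinks on page T_i.

```latex
PR(A) = (1 - d) + d \sum_{i=1}^{n} \frac{PR(T_i)}{C(T_i)}, \qquad d = 0.85
```

Each term d · PR(T_i) / C(T_i) is the per-link multiplier described above, and (1 - d) = 0.15 is the blanket amount every page receives.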

Dixon has a handy excel document with the PageRank formula set up, you can gain access to this by emailing Dixon at talk@dixonjones.com with PageRank as the subject.

The Results

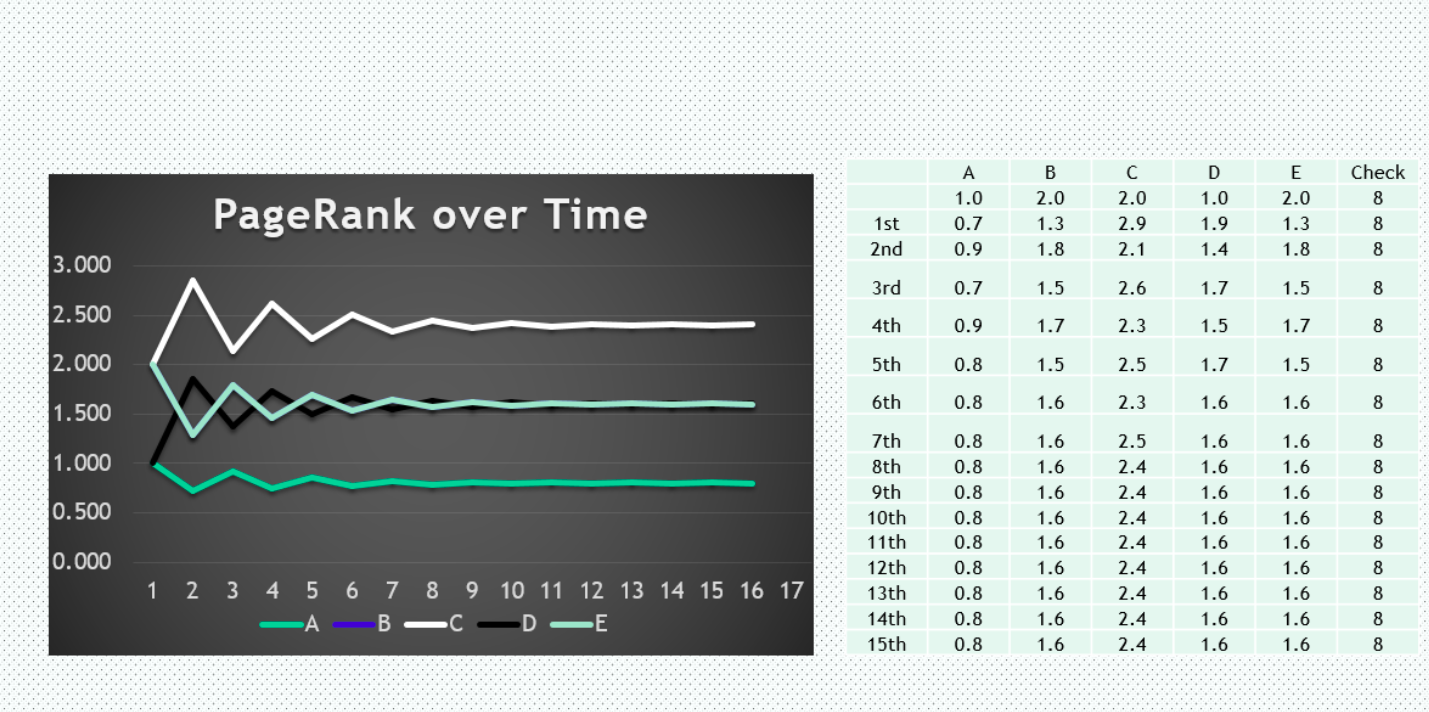

PageRank works best with a universal dataset, with the calculation run through a number of iterations. This is due to the following:

- Every signal is small

- Individually it is prone to error or opinion

- At scale errors decrease

- Confidence increases

When calculating PageRank, the results will fluctuate through each iteration, before settling to become a more consistent outcome.
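To see this “fluctuate, then settle” behaviour, here is a minimal Python sketch of the iterative calculation on a hypothetical three-page site, using the (1 - d) + d · Σ form with d = 0.85 and every page starting at 1.0. It is a simplification (pages without outlinks just leak their rank), not Google’s or Dixon’s implementation.

```python
def pagerank(links, damping=0.85, tolerance=1e-6, max_iterations=200):
    """Iterative PageRank in the original, non-normalised form PR = (1 - d) + d * sum(PR / outlinks).

    `links` maps each page to the pages it links out to. Returns the final
    scores plus the scores after every iteration, so you can watch them settle.
    Pages with no outlinks simply leak their rank in this simplified sketch.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    rank = {page: 1.0 for page in pages}
    history = [dict(rank)]
    for _ in range(max_iterations):
        new_rank = {page: 1.0 - damping for page in pages}
        for source, targets in links.items():
            for target in targets:
                # Each outlink passes on an equal share of the source page's damped rank.
                new_rank[target] += damping * rank[source] / len(targets)
        change = max(abs(new_rank[page] - rank[page]) for page in pages)
        rank = new_rank
        history.append(dict(rank))
        if change < tolerance:
            break
    return rank, history


links = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
final, history = pagerank(links)
for step, scores in enumerate(history[:4]):
    print(step, {page: round(score, 3) for page, score in scores.items()})
print({page: round(score, 2) for page, score in final.items()})  # {'A': 1.16, 'B': 0.64, 'C': 1.19}
```

The first few iterations swing noticeably (page C jumps up, then back down) before the scores converge.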

Working at a page level

PageRank was designed to work on a per-page basis, and the results change dramatically when it is calculated at page level rather than domain level. You need to be granular to calculate PageRank, so it should never be done at domain level: PageRank measures the strength of a single page, not the whole domain.

Updates to PageRank

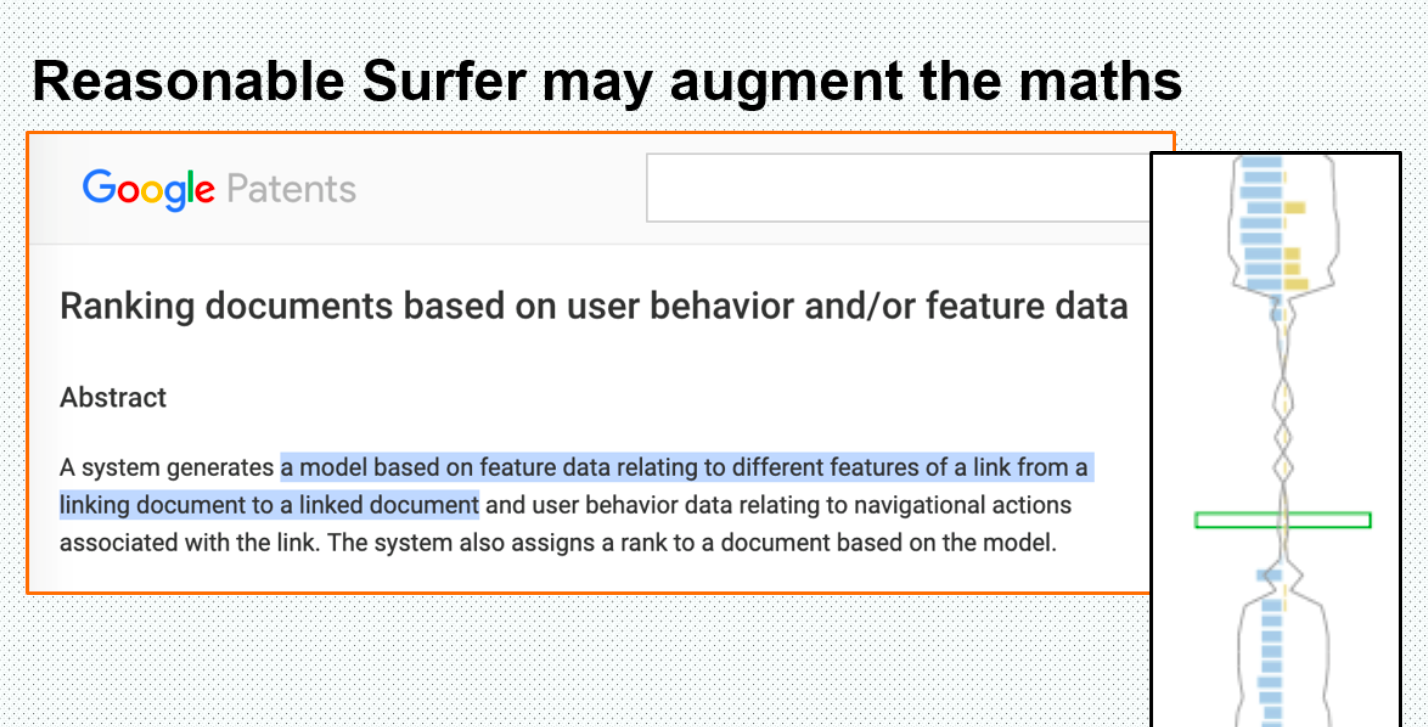

In 2006, Google amended the PageRank algorithm so that it gave approximately the same results as before but was faster to compute.

Google’s reasonable surfer model may also be used to augment the maths. This model assigns different weights to links based on their features, and evaluates user behaviour data relating to the navigational actions associated with each link. It sits on top of the PageRank model and reinforces the philosophy that not all links are treated equally.
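The “not all links are equal” idea can be illustrated by letting each link pass on rank in proportion to a weight rather than splitting it evenly. The sketch below is a hypothetical illustration only: the weights are invented, and Google has not published which link features it uses or how it weights them.

```python
def weighted_pagerank(weighted_links, damping=0.85, tolerance=1e-6, max_iterations=200):
    """PageRank where each outlink passes on rank in proportion to a weight.

    `weighted_links` maps page -> {target: weight}. The weights are
    hypothetical stand-ins for how likely a 'reasonable surfer' is to click.
    """
    pages = sorted(set(weighted_links) | {t for targets in weighted_links.values() for t in targets})
    rank = {page: 1.0 for page in pages}
    for _ in range(max_iterations):
        new_rank = {page: 1.0 - damping for page in pages}
        for source, targets in weighted_links.items():
            total_weight = sum(targets.values())
            if total_weight <= 0:
                continue
            for target, weight in targets.items():
                # Share of the source's damped rank proportional to this link's weight.
                new_rank[target] += damping * rank[source] * weight / total_weight
        change = max(abs(new_rank[page] - rank[page]) for page in pages)
        rank = new_rank
        if change < tolerance:
            break
    return rank


# Hypothetical site: the home page links prominently to /pricing and via the footer to /terms.
reasonable = {"home": {"pricing": 0.8, "terms": 0.2}, "pricing": {"home": 1.0}, "terms": {"home": 1.0}}
equal = {"home": {"pricing": 1.0, "terms": 1.0}, "pricing": {"home": 1.0}, "terms": {"home": 1.0}}

print({p: round(s, 2) for p, s in weighted_pagerank(reasonable).items()})  # {'home': 1.46, 'pricing': 1.14, 'terms': 0.4}
print({p: round(s, 2) for p, s in weighted_pagerank(equal).items()})       # {'home': 1.46, 'pricing': 0.77, 'terms': 0.77}
```

With equal weights the two linked pages end up identical; with the invented weights, the prominent link passes on far more value than the footer link.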

The BrightonSEO September 2019 Event Recap Continues

As there were so many great talks during BrightonSEO, we couldn’t cover them all in one recap post. You can find part 2 of our BrightonSEO September 2019 event recap here and part 3 here.