JavaScript is one of the most talked-about but perhaps least understood aspects of SEO today. With all of the different frameworks out there and confusion about how users and search engines actually experience JavaScript-powered websites, we brought in Bartosz Goralewicz, CEO and Head of SEO at Elephate, to talk to our Chief Growth Officer, Jon Myers, and help clear things up.

When it came to the task of explaining JavaScript, we knew that Bartosz was the man for the job. During the webinar Jon even referred to Bartosz as “Mr. JS” due to his coverage of the topic at events all over the world, so he certainly knows his stuff.

Thanks to Bartosz and Jon for their time and for treating us to an exceptional webinar, and thank you to everyone who tuned in to listen! We’ve put together a recap for you, including the webinar recording, slides and notes, so you won’t have to miss any of the great insights that were shared.

Awesome @deepcrawl webinar by @bart_goralewicz Quite enjoy geeking out over JavaScript.

— Shane (@shanejones) May 30, 2018

Here’s the full recording of the webinar in case you missed it live.

You can also take a look at Bartosz’s slides if you want to revisit any of the points covered.

The key question we wanted to answer was “how are the search engines setting themselves up to deal with JavaScript, and how do SEOs embrace it, too?”

If you want to find out about how you can optimise sites that use JavaScript, then look no further. We’ve launched our JavaScript Rendered Crawling feature that will give you the full picture when crawling sites that rely on JavaScript.

SEO has never been so dynamic

JavaScript is becoming more and more prevalent on the modern web, a trend Bartosz has noticed much more over the last year or so.

There have been many announcements in the SEO space recently about how crawlers are changing and adapting to suit the evolving nature of websites. There is so much more transparency now, which creates a really dynamic industry. Google, for example, is pushing out more changes than ever and is actually telling us about them.

One thing that will remain constant, though, is JavaScript. More and more websites are now JavaScript-based, and the web has moved away from plain HTML. As Bartosz said, it’s here to stay, and JavaScript SEO is not an elusive, “geeky option” anymore. We all need to get better at understanding it because that’s the way the web is going.

The web has moved from plain HTML – as an SEO you can embrace that. Learn from JS devs & share SEO knowledge with them. JS’s not going away.

— John ☆.o(≧▽≦)o.☆ (@JohnMu) August 8, 2017

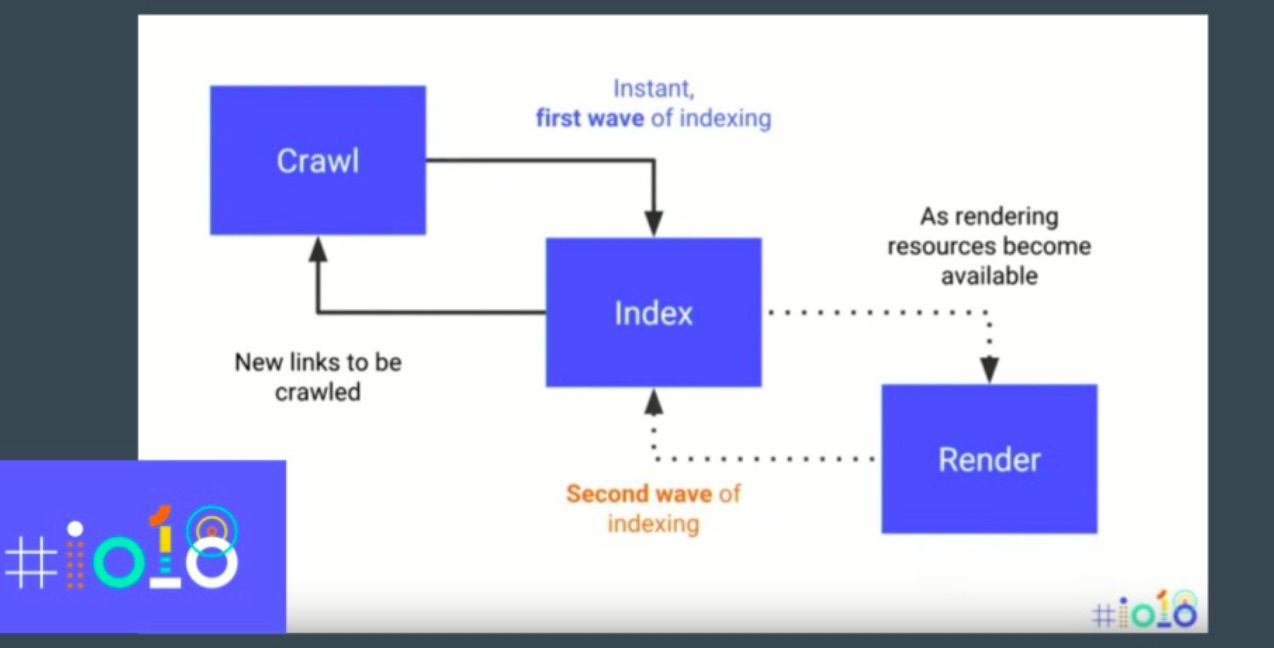

Google’s 2 waves of indexing

In the JavaScript session at Google I/O 2018, John Mueller and Tom Greenaway gave some clarity on how the search engine indexes JavaScript. We learned that there are two waves of indexing, and this is how they work:

- In the first wave, HTML and CSS will be crawled and indexed, almost instantly.

- In the second wave, Google will come back to render and index JavaScript-generated data, which can take from a few hours to over a week.

The two waves are needed because after the first wave, Google needs to wait until it has the resources to be able to process JavaScript. This means that rendering is deferred.
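The deferral described above can be pictured with a toy model. This is only an illustrative sketch, not how Google’s pipeline is implemented: the `Page` and `ToyIndexer` names, the idea of content as a set of strings, and the `budget` parameter are all assumptions made for the example.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, Set


@dataclass
class Page:
    url: str
    html_content: Set[str]  # text present in the raw HTML response
    js_content: Set[str]    # text that only exists after JavaScript runs


@dataclass
class ToyIndexer:
    """Hypothetical two-wave indexer: index HTML now, render JavaScript later."""
    index: Dict[str, Set[str]] = field(default_factory=dict)
    render_queue: Deque[Page] = field(default_factory=deque)

    def first_wave(self, page: Page) -> None:
        # Whatever is in the raw HTML is indexed straight away.
        self.index[page.url] = set(page.html_content)
        # Pages that need JavaScript wait in a queue until resources are free.
        if page.js_content:
            self.render_queue.append(page)

    def second_wave(self, budget: int) -> None:
        # Render at most `budget` queued pages; the rest keep waiting.
        for _ in range(min(budget, len(self.render_queue))):
            page = self.render_queue.popleft()
            self.index[page.url] |= page.js_content


indexer = ToyIndexer()
indexer.first_wave(Page("/server-rendered", {"title", "price"}, set()))
indexer.first_wave(Page("/client-rendered", {"title"}, {"price"}))
print(indexer.index)   # "price" is missing for /client-rendered at this point
indexer.second_wave(budget=1)
print(indexer.index)   # "price" appears once the deferred render has happened
```

In this model the server-rendered page is fully indexed after the first wave, while the client-rendered page only becomes complete once the queue reaches it.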

Google is so powerful that the whole process, including the second wave, should be instant, right? Wrong. That’s not how things work at the moment, because crawling JavaScript is very expensive. This is because the process takes:

- Time

- Processor power

- Computational resources

- Memory

Another reason for the expense is that JavaScript “lives” in your CPU: executing it requires a lot of processor power, regardless of the device being used or its battery power.

One of the reasons, is #JavaScript is extremely resource heavy (including #CPU power), which @bart_goralewicz likens to a gas guzzler, unlike a Prius! Similarly, it also costs a lot more pic.twitter.com/nd9aZaxvsl

— DeepCrawl (@DeepCrawl) May 30, 2018

To get an idea of the overall cost for search engines, all of these elements need to be scaled across the entire web! Even Google isn’t infinite…

Some case study learnings

Bartosz provided us with a few case studies featuring some of the biggest JavaScript-powered websites out there today. He looked at Netflix, YouTube, USA TODAY, The Guardian, AccuWeather, Vimeo and Hulu. Here are the key things we learned from these sites:

- The CPU load for USA TODAY’s EU Experience site was almost 0, and that was because all of its JavaScript had been stripped down from the main site.

- Page speed tool results may look bad for The Guardian, but visually for users it appears to load much quicker than competitors because it defers JavaScript loading. However, performance comes with a price, and that’s paid with CPU (which you can see in Chrome DevTools).

- For AccuWeather, there is a 13s difference in Time To First Meaningful Paint when comparing a high-end desktop CPU and a median mobile device.

- Netflix decided to remove React.js from the front end but keep it on the back end. To combat visual regression, they replaced React with plain JS code and saw a 50% performance increase. This is because they no longer depend on client CPU (including for Googlebot).

- Hulu is a JavaScript-powered website and often loses rankings to other sites. This is because a lot of its content isn’t visible to search engines or users with JS disabled.

- Vimeo has also seen visibility decreases because pre-rendering isn’t in use and content is blocked, meaning nothing is shown when viewing the site without JavaScript enabled.

- YouTube has managed to reverse traffic decline because it pre-renders for Googlebot. These positive results suggest this is best practice.
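One rough way to check what the Hulu and Vimeo points describe is to look at the text left in a page’s raw HTML when no JavaScript is executed. The sketch below uses only Python’s standard `html.parser`; the `text_without_js` helper and the two sample pages are hypothetical, and a real check would start from the HTML your own server returns.

```python
from html.parser import HTMLParser


class TextWithoutJs(HTMLParser):
    """Collect the text a client sees when it does not execute JavaScript."""

    SKIPPED = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep text only when we are outside script/style/template elements.
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def text_without_js(html: str) -> str:
    parser = TextWithoutJs()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical sample pages: an empty client-side shell and a pre-rendered page.
CLIENT_RENDERED = (
    '<html><body><div id="app"></div>'
    '<script>document.getElementById("app").textContent = "Season 1";</script>'
    "</body></html>"
)
PRE_RENDERED = '<html><body><div id="app"><h1>Season 1</h1></div></body></html>'

print(repr(text_without_js(CLIENT_RENDERED)))  # empty: nothing to read without JS
print(repr(text_without_js(PRE_RENDERED)))     # the heading text is available
```

If the first kind of result comes back for an important template, that content depends entirely on rendering.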

There are massive differences in loading times on sites, for example between @guardian and @netflix, as the latter invested a lot and reduced #reactJS and saw massive speed increases! pic.twitter.com/QnqGyKveo4

— DeepCrawl (@DeepCrawl) May 30, 2018

How JavaScript impacts SEO

There are many ways in which JavaScript impacts SEO, but Bartosz covered four of the main areas: indexing, crawl budget, ranking and mobile-first.

Indexing

In Bartosz’s words, JavaScript indexing is “shaky.” For example, the Google team working on Angular saw every page more than one directory level deep get deindexed. Perhaps Google was saving resources by only looking at the top level URLs.

We know that there are two waves of indexing, but we also need to consider that a rendering queue builds up for the second wave. A good starting point for researching second-wave indexing speed is to find out what affects the rendering queue and gets JavaScript-powered sites rendered faster. Bartosz suspects that a site’s relevance and importance are among the factors that can move you up the queue. Get testing, SEO community…

Another thing to bear in mind is that JavaScript can cause content to be indexed under the wrong URL or on duplicate URLs.

Crawl Budget

Search engines need to be able to crawl your site quickly in order to give you a larger crawl budget, and crawling JavaScript is slower than crawling static HTML. Having a lot of resource-intensive pages for Google to get through will mean your pages won’t be crawled as quickly or frequently.

Ranking

Even if Google can index your content in the first place, it is rare to see sites that rely heavily on JavaScript ranking well. Bartosz has found few JavaScript-powered websites ranking, especially when using client-side rendering. Pre-rendering is needed to get around this.

Mobile-first

Considering mobile-first indexing, do mobile sites have less JavaScript? The answer is no, so this is something to bear in mind when carrying out any mobile-first checks. Mobile sites and configurations also have a lot of JavaScript which still needs to be processed by Googlebot Smartphone.

Thinking back to the learnings from the AccuWeather case study, mobile is the most popular device type but isn’t necessarily the best performing. There are vast differences in processing power between a median mobile device and a high-end desktop device. This will affect the performance your users experience.

The endless (and chaotic) possibilities of JavaScript

JavaScript crawling isn’t black and white, and there are so many aspects that can affect its cost. When conducting their own research, Elephate saw search engines crawling differently depending on the placement of JavaScript (e.g. inline or external).

There are so many different frameworks out there which can be configured in so many different ways. All of this adds up to complete chaos for the search engines and how they can render and index websites.

Considering that there are also over 130 trillion documents on the web and 20 billion pages crawled per day, it’s no wonder that JavaScript is so resource-intensive!

Embracing the chaos of JavaScript

Instead of running from it, we need to start embracing the chaos of JavaScript, especially with all the new frameworks out there now. In order to do this you need to:

- Make sure you’re not caught between the two waves of indexing – ride the first wave by using server-side or hybrid rendering and reduce reliance on client-side rendering where possible.

- Make the SEO team the glue that holds your website and your company’s departments together – gather knowledge from the developer and framework creator sides to help everyone else understand what’s going on.

It may sound daunting that JavaScript can cause such a mess for search engines and performance, but here are some positives to take away:

- Google is continually getting better at rendering and indexing JavaScript-powered sites. The search engine plans to make rendering a more instant process in the future with a more modern version of Chrome.

- Framework creators have backed this up by saying that server-side rendering is important for SEO, but search engines are also getting better at rendering JavaScript.

Make sure you’re riding the first wave and not the second wave, otherwise #Google won’t find everything in order to index it. Also, consider hybrid rendering (or even server-side) over client-side for #JavaScript –@bart_goralewicz #SEO pic.twitter.com/9dA0Djj3Zd

— DeepCrawl (@DeepCrawl) May 30, 2018

Final thoughts and tips from Bartosz

- Pre-rendering is better for SEO but it is extremely expensive. It requires a lot of computing power and developer knowledge.

- Use Google’s mobile-friendly test to see the results of how the search engine is processing your HTML and JavaScript, as well as seeing page loading issues.

- As a bit of homework, try out Diffchecker to see the differences in content caused by JavaScript.
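The same homework can be scripted. The sketch below is not Diffchecker itself, just an approximation of the idea using Python’s standard `difflib`: it lists the lines that appear only in the rendered DOM. The `js_added_lines` helper and both HTML samples are made up for illustration; in practice the raw HTML would come from "view source" and the rendered HTML from the browser’s inspector.

```python
import difflib
from typing import List


def js_added_lines(raw_html: str, rendered_html: str) -> List[str]:
    """Return lines present in the rendered DOM but not in the raw HTML."""
    raw_lines = raw_html.splitlines()
    rendered_lines = rendered_html.splitlines()
    matcher = difflib.SequenceMatcher(a=raw_lines, b=rendered_lines, autojunk=False)
    added = []
    for tag, _i1, _i2, j1, j2 in matcher.get_opcodes():
        # "insert" and "replace" both introduce lines that JavaScript produced.
        if tag in ("insert", "replace"):
            added.extend(rendered_lines[j1:j2])
    return added


# Hypothetical samples: the HTML as served, and the DOM after JavaScript has run.
RAW = """<html>
<body>
<div id="app"></div>
</body>
</html>"""

RENDERED = """<html>
<body>
<div id="app">
<h1>Weather today</h1>
</div>
</body>
</html>"""

for line in js_added_lines(RAW, RENDERED):
    print(line)
```

Anything this prints is content that only exists after rendering, which makes it a candidate for server-side or hybrid rendering.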

SMASHED IT!

Managed to get on via my mobile in the end.

Sooo much incredible info. First thing tomorrow I kick off a convo with the dev team to have our links contained in accordions JS free for googlebot/bingbot.

— Witty Black Guy (@MrLukeCarthy) May 30, 2018

So there you have it; hopefully you’ve gained some useful insights to take back to your own companies, and you now have a deeper understanding of how JavaScript works.

Our next webinar: Site speed

If you enjoyed this webinar, then you’ll love the next one we have planned. Jon’s next webinar guest is Jon Henshaw, and they’ll be discussing the topic of site speed and performance. It’s set to be a really insightful session, so be sure to book your place now.