What makes backlinks so important in your crawl?

Backlinks are links from other websites to yours. They bring users, PageRank, and link equity to your website, all of which can flow through your website’s architecture.

We regularly see cases where a website has attracted great links from other websites, but over the years the website itself has changed. Products get discontinued, platforms are migrated, we all move to more secure URLs, and backlinks are forgotten. This is a big problem for many websites: for some, a large portion of their authority is tied to pages which no longer exist.

Today we released six new backlink reports to help you identify issues and monitor the health of your backlinked pages.

New Backlink Reports

Broken Pages with Backlinks

Broken pages with backlinks are potentially the most severe, yet easiest-to-fix, backlink issue. They normally occur when backlinks point to URLs which were useful pages in the past but no longer exist. This happens for several reasons: products were discontinued, legacy URL structures were lost during a site migration, or pages were renamed or moved around the site.

Not only is the PageRank/link equity pointing to these pages completely lost, but so are any users who happen to follow those backlinks.

This report will show you every URL which has backlinks but returns a 4xx or 5xx status code. You should address this issue by either setting up redirects to a relevant page, or restoring the page to bring it back to life.

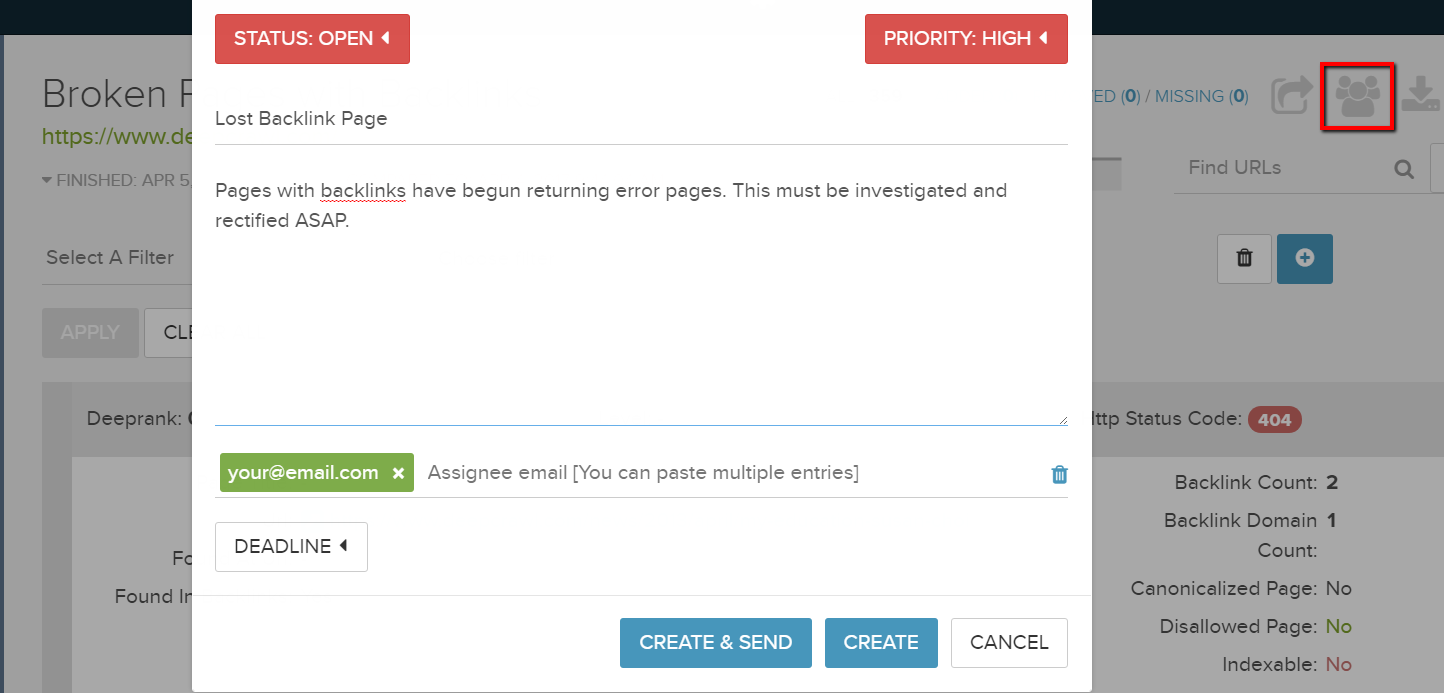
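
To make the report's logic concrete, here is a minimal Python sketch of the same check run over your own data. It assumes you already have two lookups, a status code per URL and a backlink count per URL; the function name, both dictionaries, and the example URLs are hypothetical and are not part of the product.

```python
from typing import Dict, List


def broken_backlinked_urls(
    status_by_url: Dict[str, int],
    backlink_counts: Dict[str, int],
) -> List[str]:
    """Return URLs that have at least one backlink and respond with a 4xx/5xx code."""
    return sorted(
        url
        for url, status in status_by_url.items()
        if backlink_counts.get(url, 0) > 0 and 400 <= status <= 599
    )


# Hypothetical sample data: status codes from a crawl, counts from a backlink export.
statuses = {
    "https://www.example.com/old-product": 404,
    "https://www.example.com/dresses": 200,
    "https://www.example.com/legacy/page": 500,
}
backlinks = {
    "https://www.example.com/old-product": 12,
    "https://www.example.com/dresses": 40,
}

for url in broken_backlinked_urls(statuses, backlinks):
    print(url)
```

Each URL printed is a candidate for a redirect to a relevant page or for restoring the original page.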

Set up a lost backlink landing page alert:

You can be instantly notified of any backlinked URLs which begin to return an error page. Set up a task for this report, and we’ll notify you of any changes to this report the next time we crawl your website. Find out what’s been added, removed or is missing, all in one place.

Disallowed URLs with Backlinks

This report shows URLs which have backlinks but are blocked from crawling by your robots.txt file. As with error pages, search engines are unable to pass any PageRank or link equity to these types of pages. This report is a quick way for you to make sure your best backlinks aren’t accidentally being blocked, causing you to miss ranking opportunities.
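
As a rough illustration of this check, the sketch below uses Python's standard `urllib.robotparser` to test a list of backlinked URLs against a robots.txt file. The robots.txt content, the URLs, and the helper name `disallowed_backlinked_urls` are assumptions made for the example, and the standard-library parser may not match every search engine's interpretation of robots.txt.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser


def disallowed_backlinked_urls(
    robots_txt: str,
    urls: Iterable[str],
    user_agent: str = "*",
) -> List[str]:
    """Return the URLs that the given robots.txt text blocks for user_agent."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt and backlinked URLs.
robots = """\
User-agent: *
Disallow: /private/
"""
backlinked = [
    "https://www.example.com/private/offer",
    "https://www.example.com/dresses",
]

for url in disallowed_backlinked_urls(robots, backlinked):
    print(url)
```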

Redirecting URLs with Backlinks

Redirecting URLs which have backlinks are not necessarily negative: there are several instances where this would be the correct setup. However, it is important to review these redirecting pages to ensure that they are sending users and link equity to the right pages.
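
One way to review redirects in bulk is to trace each backlinked URL to its final destination. The sketch below is only an illustration: it assumes a hypothetical `redirects` mapping of source URL to target URL exported from a crawl, and the function name and `max_hops` limit are arbitrary choices for the example.

```python
from typing import Dict, List


def resolve_redirect(url: str, redirects: Dict[str, str], max_hops: int = 10) -> List[str]:
    """Follow url through the redirect map and return the whole chain, start URL first."""
    chain = [url]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in chain[:-1]:
            # The target was already visited: this is a redirect loop, so stop here.
            break
    return chain


# Hypothetical redirect map: an HTTP-to-HTTPS hop followed by a renamed page.
redirects = {
    "http://www.example.com/old": "https://www.example.com/old",
    "https://www.example.com/old": "https://www.example.com/new",
}

print(" -> ".join(resolve_redirect("http://www.example.com/old", redirects)))
```

The last URL in each chain is where users and link equity end up, so that is the page to compare against the one the backlink was originally meant for.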

Non-indexable Pages with Backlinks

Some of your backlinked pages may be non-indexable – they could be canonicalised to another URL, or have the ‘noindex’ robots directive. These pages are not strictly a problem, but are often an opportunity.

Canonicalised pages may have gained backlinks because they are useful to users, but were not discoverable in search. It is worth reconsidering whether these pages should be indexable. For instance, if your “Silk Dresses” page canonicalises to your main “Dresses” page but websites are linking to it, it makes sense that search engines may also want to send users there.
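
For readers who want to spot-check a page by hand, the following sketch reads a page's HTML and reports whether it is canonicalised elsewhere or carries a noindex directive. It uses only Python's standard `html.parser`; the class and function names and the example URLs are hypothetical, and it deliberately ignores other signals such as the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser
from typing import Optional


class IndexabilityParser(HTMLParser):
    """Collects the meta robots noindex directive and the canonical link, if present."""

    def __init__(self) -> None:
        super().__init__()
        self.noindex = False
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attr.get("name", "").lower() == "robots":
            directives = [d.strip().lower() for d in attr.get("content", "").split(",")]
            # "none" is shorthand for "noindex, nofollow".
            if "noindex" in directives or "none" in directives:
                self.noindex = True
        elif tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonical = attr.get("href") or None


def non_indexable_reason(url: str, html: str) -> Optional[str]:
    """Return a short reason if the page is non-indexable, otherwise None."""
    parser = IndexabilityParser()
    parser.feed(html)
    if parser.noindex:
        return "noindex"
    if parser.canonical and parser.canonical != url:
        return "canonicalised to " + parser.canonical
    return None


# Hypothetical "Silk Dresses" page that canonicalises to the main "Dresses" page.
page = '<html><head><link rel="canonical" href="https://www.example.com/dresses"></head></html>'
print(non_indexable_reason("https://www.example.com/dresses/silk", page))
```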

Pages with backlinks but no links out, or are nofollowed

This report keeps track of your backlinked pages which have no links to other pages, or contain the meta nofollow tag. To help users find other parts of your website and let search engines pass PageRank/link equity, your backlinked pages should contain links to other pages. Solving this issue can be as simple as a link to your homepage, or as comprehensive as including your core navigation bar. A meta nofollow tag creates the same effect as having no links – search engines are unable to pass link equity to the rest of your site.
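
The two conditions in this report can be checked on a single page with a short script. The sketch below is an approximation built on Python's standard `html.parser`: it counts `<a href>` links and looks for a meta robots nofollow directive. The class name, the function name, and the sample HTML are hypothetical, and per-link `rel="nofollow"` attributes are not considered.

```python
from html.parser import HTMLParser
from typing import List


class OutlinkParser(HTMLParser):
    """Collects link targets and detects a page-level meta robots nofollow."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []
        self.meta_nofollow = False

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        if tag == "a" and attr.get("href"):
            self.links.append(attr["href"])
        elif tag == "meta" and attr.get("name", "").lower() == "robots":
            directives = [d.strip().lower() for d in attr.get("content", "").split(",")]
            # "none" is shorthand for "noindex, nofollow".
            if "nofollow" in directives or "none" in directives:
                self.meta_nofollow = True


def can_pass_link_equity(html: str) -> bool:
    """True if the page has at least one link and no page-level meta nofollow."""
    parser = OutlinkParser()
    parser.feed(html)
    return bool(parser.links) and not parser.meta_nofollow


# Hypothetical landing page with a single link back to the homepage.
page = '<html><body><a href="/">Home</a></body></html>'
print(can_pass_link_equity(page))
```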

Adding Backlink Data To Reports

We support uploads of backlinks from several sources and backlink profiles. See supported backlink tools.