
Once you’ve begun the process of building a culture of SEO and educating your company about its importance, you’ll need to analyze your website to make sure that it is technically sound and won’t impede any SEO campaign efforts through poor optimization.

The solution to the challenge of scale is to always keep a wide-angle view of the whole organization and its SEO performance. Tools that support this wide view are a huge help for traffic metrics; for the human challenges, it is vital to build relationships across the entire organization. Coupled with this wide view, it is critical to prioritize ruthlessly and avoid getting stuck in the weeds.

For example, fixing every 404 on a huge site is akin to playing arcade whack-a-mole; it is far more important to know what creates the 404s and prevent a recurrence. Similarly, if there is a rogue engineer or product manager who insists on doing things their way, it is not always necessary to prove them wrong and bring them into the fold. My experience in enterprise SEO has taught me that it is always better to win the war than to win the battle.

In this section of the guide, we’ll explore the methods you can use for analyzing your website and collecting meaningful data that can help inform your SEO strategy, even for the largest websites. We’ll also look at how you can use this data to build a strong technical foundation for your site to ensure success in the long run.

Effective crawl management

Before any meaningful optimization work can take place, you’ll need a detailed picture of your website’s technical health. This can be difficult to achieve, however, due to the size of enterprise sites and the time it can take to crawl them and process all of their data.

Running smaller, more regular, targeted crawls means that SEOs managing even the largest sites don’t have to wait around before they can start building their strategy. They get their data much more quickly, which makes them much more agile.

The method of tactical crawling is based on the idea that you don’t need to crawl every single URL of a site every time. You only need to gather enough data to validate issues. With tactical, targeted crawling methods, you can use segments to build a representative picture of site health as a whole.

There are two main methods of tactical crawling: sampling and slicing.

Collecting data by sampling

When you sample data, the aim is to get a small percentage of URLs that’s representative of the entire website. Here are some methods for doing so:

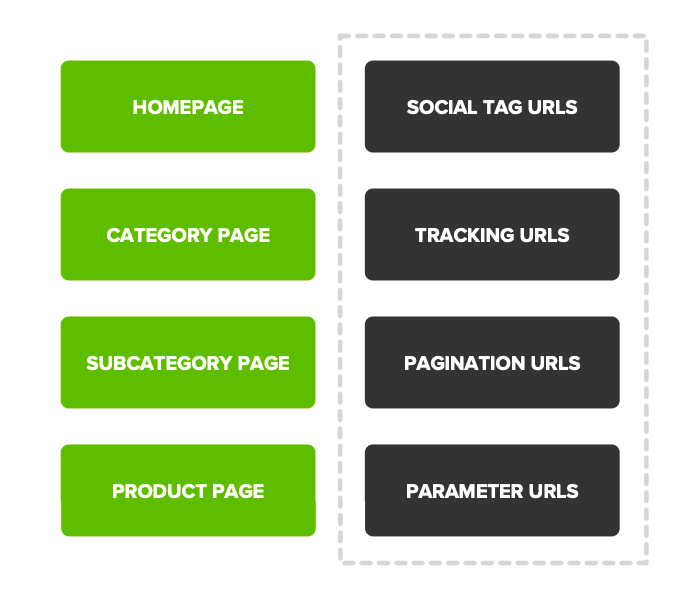

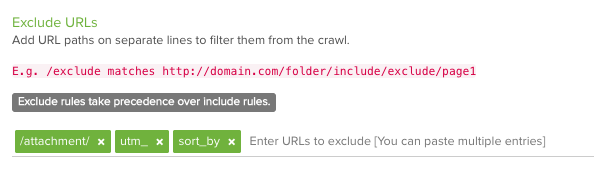

1. Start a small crawl of around 10,000 URLs and find areas of crawl waste to exclude from the full crawl. E.g. UTM tracking and parameter URLs.

Source: Lumar

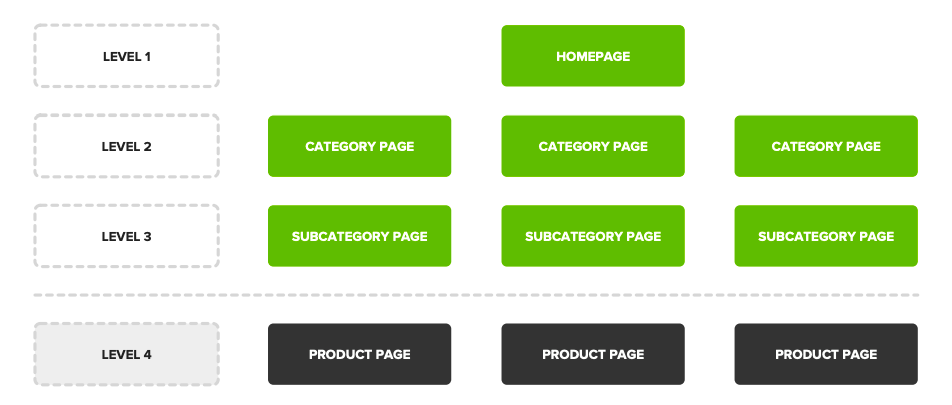

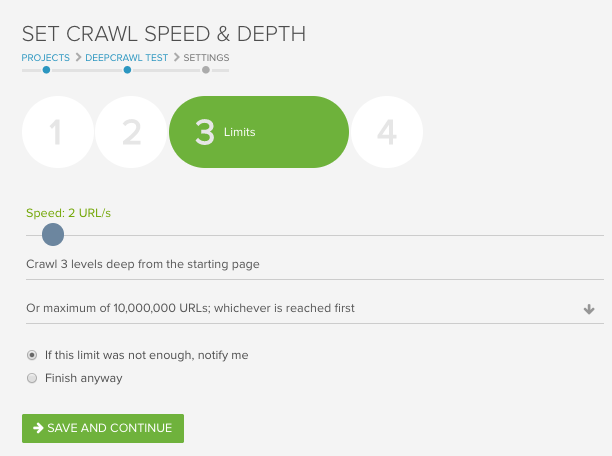

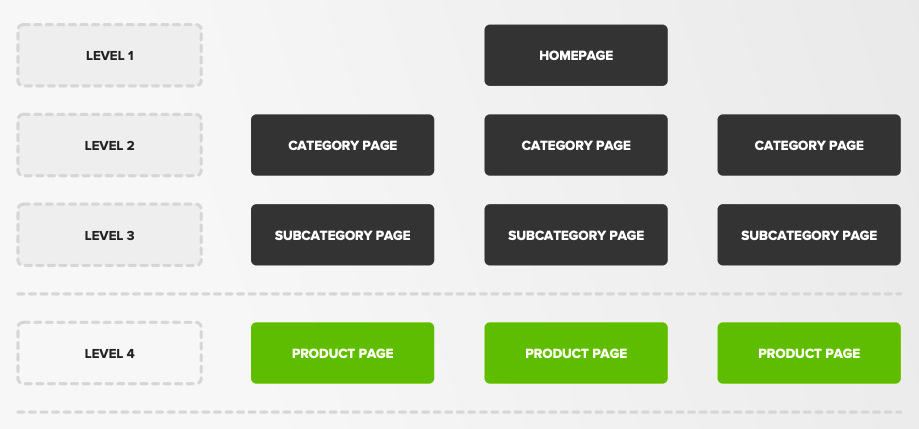

2. Use level limitation to assess site breadth without getting lost in the weeds. E.g. only crawl up to 3 levels deep.

Source: Lumar

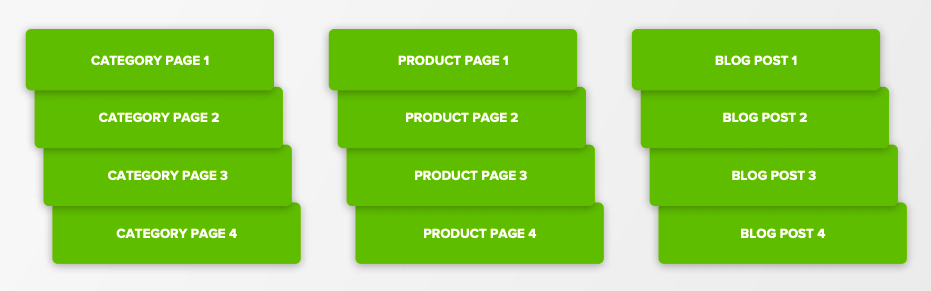

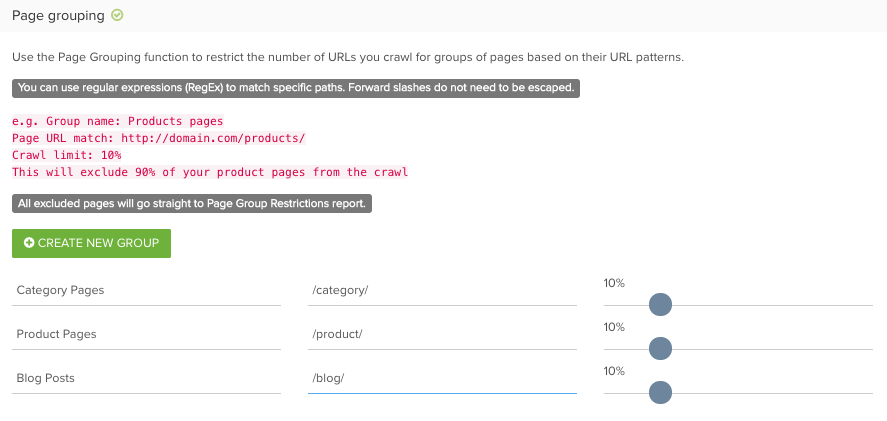

3. Crawl a certain number of examples of each page type to analyze page templates. E.g. crawl a handful of category pages, product pages, and blog posts.

Source: Lumar
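The three sampling methods above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular crawler implements it: the function names are hypothetical, any URL with a query string is treated as crawl waste, and the number of path segments stands in for crawl level (a real crawler measures level in clicks from the start page, which this sketch does not).

```python
from collections import defaultdict
from urllib.parse import urlsplit


def path_segments(url):
    """Return the non-empty path segments of a URL."""
    return [part for part in urlsplit(url).path.split("/") if part]


def is_crawl_waste(url):
    """Assumption: any URL with a query string (UTM tags, parameters) is waste."""
    return bool(urlsplit(url).query)


def page_type(url):
    """Assumption: the first path segment identifies the page template."""
    segments = path_segments(url)
    return segments[0] if segments else "home"


def sample_urls(urls, max_depth=3, per_type=2):
    """Keep a few clean, shallow URLs for each page type."""
    picked = defaultdict(list)
    for url in urls:
        # Methods 1 and 2: drop parameter URLs and anything too deep.
        if is_crawl_waste(url) or len(path_segments(url)) > max_depth:
            continue
        # Method 3: keep only a handful of examples per page type.
        bucket = picked[page_type(url)]
        if len(bucket) < per_type:
            bucket.append(url)
    return dict(picked)


if __name__ == "__main__":
    discovered = [
        "https://example.com/",
        "https://example.com/products/a",
        "https://example.com/products/b",
        "https://example.com/products/c",
        "https://example.com/products/a?utm_source=newsletter",
        "https://example.com/blog/2020/01/02/post",
        "https://example.com/blog/post",
    ]
    for kind, sample in sample_urls(discovered).items():
        print(kind, sample)
```

The resulting sample is the list you would hand to a crawler as a start list or use to define inclusion rules, depending on what your tool supports.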

Collecting data by slicing

When you slice data you are looking to get a smaller, distinct section in isolation rather than a percentage of the site as a whole. Here are some methods for doing so:

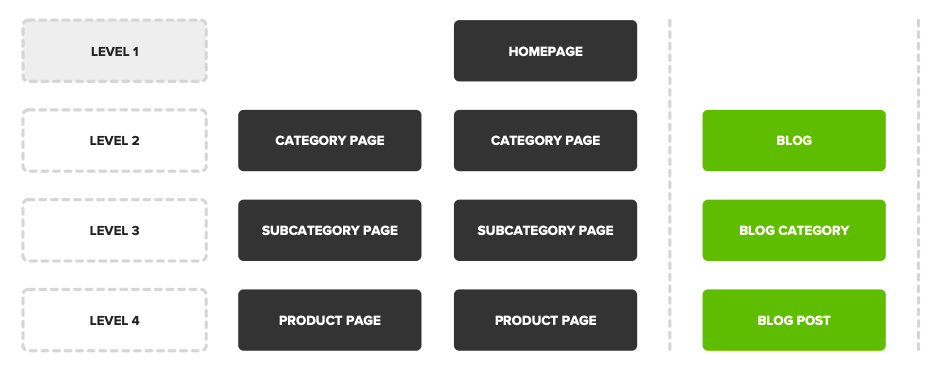

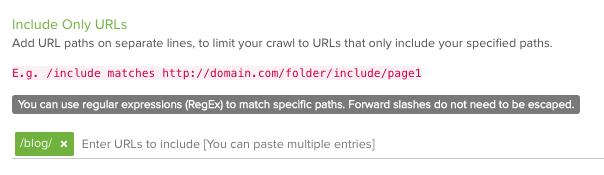

1. Crawl a distinct section that serves a separate function. E.g. the blog or a separate mobile site.

Source: Lumar

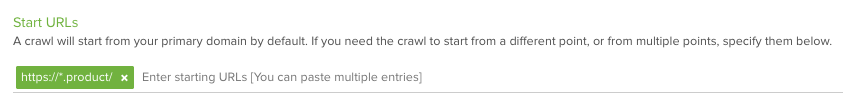

2. Crawl a horizontal slice of one subsection of the website. E.g. all of the products but none of the categories.

Source: Lumar
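Slicing can be illustrated in the same way. The sketch below assumes the site uses consistent subfolders such as /products/ and /category/ (hypothetical paths), and selects a slice purely by URL path prefix. Most crawlers offer include and exclude rules that do the equivalent job, so treat this as an illustration of the idea rather than a required step.

```python
from urllib.parse import urlsplit


def slice_urls(urls, include_prefixes, exclude_prefixes=()):
    """Return only the URLs whose path falls inside the chosen slice."""
    include = tuple(include_prefixes)
    exclude = tuple(exclude_prefixes)
    selected = []
    for url in urls:
        path = urlsplit(url).path
        # Keep the URL if it is inside the slice and not explicitly excluded.
        if path.startswith(include) and not path.startswith(exclude):
            selected.append(url)
    return selected


if __name__ == "__main__":
    discovered = [
        "https://example.com/products/red-shoe",
        "https://example.com/products/archive/old-shoe",
        "https://example.com/category/shoes",
        "https://example.com/blog/how-to-lace-shoes",
    ]
    # A horizontal slice: all of the products but none of the categories.
    print(slice_urls(discovered, ["/products/"]))
    # A distinct section: the blog only.
    print(slice_urls(discovered, ["/blog/"]))
```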

Try out these tactical crawling methods with Lumar

These tactical crawling methods give you an agile approach to website crawling, so you can be flexible and proactive when managing even the largest websites. This comes in handy when you need to gather data quickly to build an SEO project proposal, or when you need to prioritize the biggest issues in your workload at short notice.

Tactical crawling also allows you to get to know your website and its structure better. Once you establish patterns such as internal linking between key page sets, you no longer need to crawl everything because you understand the overall structure. This allows you to build up your own process that works for you and your site and crawl more efficiently in the future.

Use tactical crawling methods to get faster insights into your site’s technical health, which will allow you to be more agile and proactive in your work.

Remember to also run annual or semi-annual full crawls and tech SEO audits, as the data from these will form the primary baseline for planning any tactical crawls.

Now that you’ve got some advice on crawling your own site, here are some tips on ensuring better crawl efficiency for the search engines visiting your site:

- Use robots.txt to control which pages are available for crawling, and directives like noindex to control which pages are indexed.

- Implement canonical tags to consolidate ranking signals for duplicate URLs.

- Have sitemaps that only include indexable pages to make sure search engines are being sent up-to-date pages to crawl.

- Make sure only necessary faceted pages that provide value to users are indexable.

Google only needs to crawl facet pages that include otherwise unlinked products or content.

-John Mueller, Google Webmaster Hangout
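Before relying on robots.txt rules like these, it can help to check which URLs they actually block. The sketch below uses Python's standard-library `urllib.robotparser` against a hypothetical robots.txt; the rules and URLs are assumptions for illustration. The standard-library parser does simple prefix matching, so it may not interpret every rule exactly as a given search engine does, and it says nothing about noindex or canonical tags, which live on the pages themselves.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block internal search results and the cart.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/
"""


def crawlable(urls, robots_txt, user_agent="*"):
    """Map each URL to True if the rules allow the user agent to fetch it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    checks = crawlable(
        [
            "https://example.com/products/red-shoe",
            "https://example.com/search/shoes",
            "https://example.com/cart/checkout",
        ],
        ROBOTS_TXT,
    )
    for url, allowed in checks.items():
        print("allowed" if allowed else "blocked", url)
```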

Segmentation & targeted analysis

The key to better understanding the technical health of an enterprise site and gauging the success of particular fixes is to break it down into smaller, more manageable segments. Categorizing a website can help you better analyze and understand it.

You should be able to split a website into around 5-7 categories, such as:

- Product pages

- Category pages

- Blog pages

- Vanity pages

- Navigational pages

- Transactional pages

- FAQ pages

Categorizing a site for internal purposes isn’t enough; this should also be reflected in a consistent site structure with understandable URL paths and subfolders, such as /products/ or /articles/, to allow for easy grouping, crawling and analysis.

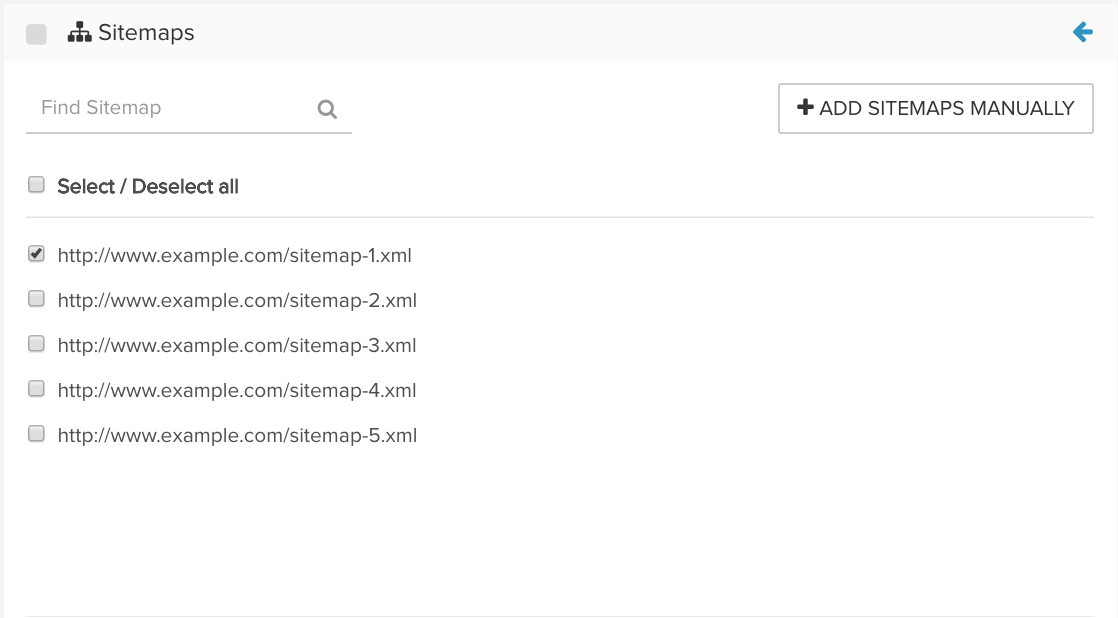

This makes it more straightforward to segment data in sitemaps:

Break up large sitemaps into smaller sitemaps to prevent your server from being overloaded if Google requests your sitemap frequently. A sitemap file can’t contain more than 50,000 URLs and must be no larger than 50 MB uncompressed.

Source: Lumar
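As a rough sketch of how segmentation and the sitemap limits fit together, the Python below groups URLs by their first subfolder and writes one or more sitemap files per segment, capped at 50,000 URLs each. The file naming scheme and function names are hypothetical, and the sketch does not check the 50 MB size limit, which you would still need to verify for very long URLs.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from urllib.parse import urlsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000


def segment_of(url):
    """Assumption: the first subfolder (e.g. /products/) names the segment."""
    parts = [part for part in urlsplit(url).path.split("/") if part]
    return parts[0] if parts else "root"


def chunked(items, size):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def build_sitemaps(urls, max_urls=MAX_URLS_PER_SITEMAP):
    """Return {filename: xml_string} with each file limited to max_urls."""
    by_segment = defaultdict(list)
    for url in urls:
        by_segment[segment_of(url)].append(url)

    files = {}
    for segment, segment_urls in by_segment.items():
        for index, chunk in enumerate(chunked(segment_urls, max_urls), start=1):
            urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
            for url in chunk:
                ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
            # Hypothetical naming scheme: sitemap-<segment>-<n>.xml
            name = f"sitemap-{segment}-{index}.xml"
            files[name] = ET.tostring(urlset, encoding="unicode")
    return files


if __name__ == "__main__":
    urls = [f"https://example.com/products/item-{n}" for n in range(5)]
    urls.append("https://example.com/blog/post")
    # A tiny limit here just to show the splitting behavior.
    for name in sorted(build_sitemaps(urls, max_urls=2)):
        print(name)
```

Keeping one set of sitemap files per segment also makes it easier to compare submitted and indexed URLs segment by segment in your reporting.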

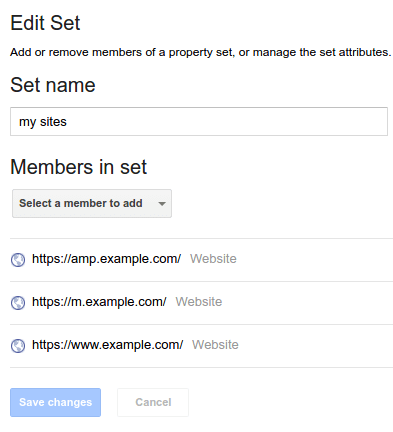

You can also segment data within external sources such as log file tools, Google Analytics and Google Search Console, by creating custom properties and inclusion rules to analyze particular website categories:

Source: Google Search Console

Another use of segmentation for website analysis is to select subsets of pages for close analysis. This involves selecting a handful of pages from different categories on your site which have:

- A strong history of organic traffic.

- Consistent levels of user engagement.

- Close monitoring and documentation of all changes made.

By analyzing small groups of pages like this and carefully documenting key metrics and any changes made to them on an ongoing basis, you can filter out the noise of the rest of the site and start to identify fixes that bring about performance increases.

When you see the needle move for these pages, this will be much more meaningful because you will be able to attribute performance improvements more accurately to the changes you have made. In a small way, this can help you prove the value of SEO fixes.

Better understand enterprise sites by breaking them down into smaller segments, as this allows you to drill down and start spotting more trends and opportunities.

You can then test out implementing changes that saw positive results on more subsets of pages. It’s important to roll out changes gradually across enterprise sites to minimize ranking fluctuations and any impact on performance.

It’s fine to make large scale site changes all at once, but this can cause bigger SERP fluctuations than phased changes.

-John Mueller, Google Webmaster Hangout

Building solid technical foundations

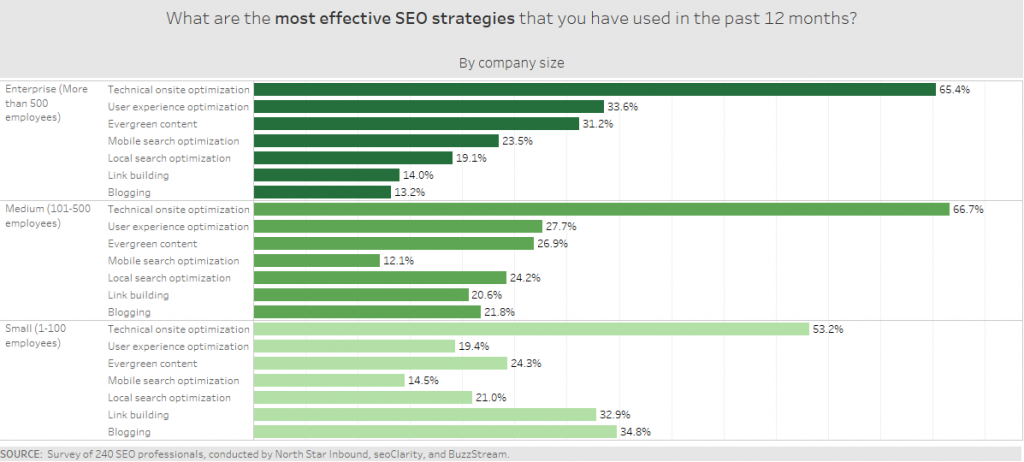

Technical on-site optimization should be at the forefront of any enterprise SEO strategy, and this should form the foundations upon which you can then build campaigns.

Source: Search Engine Watch

Three crucial elements that should form the technical foundation of any enterprise site are internal linking and site architecture, site speed and web performance, and internationalization. However, these are also elements that enterprise sites struggle the most with. This is something that the Lumar team has seen from working with our biggest enterprise clients.

- Internal linking & site architecture: This is one of the most powerful elements that enterprise sites can leverage to increase crawlability of important pages, improve navigation for users, and pass PageRank to important pages that you want to rank in SERPs.

- Site speed & web performance: Site speed sits at the top of the UX hierarchy in terms of what users want most from a website. However, it can be a huge challenge to optimize the performance of enterprise sites.

- Internationalization: Global websites need to be able to reach and speak to wide international audiences, but this is only possible through the correct targeting and localization.

Success in enterprise SEO is not something that can be measured only through your website’s ranking. It is also based on the foundation of your SEO efforts: the structure of the website, multilingual optimization, scalability, automation and accessibility of the content, since the website consists of millions of pages. Therefore, good indexing and accessibility are important points of concern for enterprise SEO.

That’s why we’ve put together comprehensive white papers on each of these topics so you can fully understand their importance and how you can make sure your website has a fully optimised technical foundation that will stand you in good stead for the SEO campaigns you’ll go on to launch. Get the technical side in order first so you have a solid foundation to go after your tactical goals around long-term ranking strategies.

Read our guide to site architecture optimization

Read our guide to international SEO
